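This article walks through building AlexNet, VGG, ResNet, and DenseNet with PaddlePaddle. It first prepares the data: two image classes are selected, split into training and validation sets, and wrapped in a dataset class with preprocessing. It then builds each model, prints its structure and parameter counts, and finally trains and validates it. The first three models predict the validation samples correctly, while DenseNet shows a slight deviation.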


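Project overview

As beginners, we often focus only on calling models. With Paddle's ready-made model suites, train and predict are enough to complete a deep learning task. To go further, though, you need to understand how the models are structured, and assembling them yourself from the API is a good way to do that. Many details look simple in an architecture diagram but turn out to be slightly confusing when you implement them. Working through those details makes you think harder about the models, and it improves your programming skills along the way.

Dataset

The dataset comes from the 3rd China AI+ Innovation and Entrepreneurship Competition: Semi-Supervised Learning Object Localization Competition. The original data is taken from ImageNet and contains 100 classes with 100 images each. To keep training and validation simple, only 2 of the classes are used here.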

Import the required libraries

import paddle
import paddle.nn as nn
import numpy as np
from paddle.io import Dataset, DataLoader
from tqdm import tqdm
import os
import random
import cv2
from paddle.vision import transforms as T
import paddle.nn.functional as F
import pandas as pd
import math

Configuration

lr_base = 1e-3
epochs = 10
batch_size = 4
image_size = (256, 256)

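Generate the dataset list files and datasets

The images are taken from ImageNet. Because the goal is only to verify that the models are implemented correctly, the dataset is small: two classes with 100 images each, stored in the image_class1 and image_class2 folders.

!unzip -qo dataset.zip

dataset = []
# Open in "w" mode so that re-running the cell does not append duplicate entries.
with open("dataset.txt", "w") as f:
    for img in os.listdir("image_class1"):
        dataset.append("image_class1/" + img + " 0\n")
        f.write("image_class1/" + img + " 0\n")
    for img in os.listdir("image_class2"):
        dataset.append("image_class2/" + img + " 1\n")
        f.write("image_class2/" + img + " 1\n")

random.shuffle(dataset)

val = 0.2  # fraction of samples held out for validation
offset = int(len(dataset) * val)
with open("train_list.txt", "w") as f:
    for img in dataset[:-offset]:
        f.write(img)
with open("val_list.txt", "w") as f:
    for img in dataset[-offset:]:
        f.write(img)

A reminder: be sure to normalize the data during preprocessing, otherwise the model usually fails to converge.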
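To make the normalization note concrete, here is a minimal sketch in plain Python of the scaling that the dataset class in this article applies, `(pixel - 127.5) / 255.0`. The helper name `normalize_pixel` is made up for illustration. It only shows that 8-bit pixel values in [0, 255] end up in [-0.5, 0.5].

```python
def normalize_pixel(value):
    """Map an 8-bit pixel value from [0, 255] to [-0.5, 0.5]."""
    return (value - 127.5) / 255.0


# Darkest, middle, and brightest possible pixel values
samples = [0, 127.5, 255]
normalized = [normalize_pixel(v) for v in samples]
print(normalized)  # [-0.5, 0.0, 0.5]
```

Keeping the inputs in a small range centered on zero is a common choice. Other schemes, such as per-channel mean and standard deviation, would serve the same purpose.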

# DatasetN for AlexNet: 3-channel input (c=3)
class DatasetN(Dataset):
    def __init__(self, data="dataset.txt", transforms=None):
        super().__init__()
        # Each line has the form "<image path> <label>"
        with open(data) as f:
            self.dataset = [d.strip() for d in f.readlines()]
        # Stored but not applied; normalization is done by hand in __getitem__
        self.transforms = transforms

    def __getitem__(self, ind):
        data = self.dataset[ind]
        img_path, label = data.split(" ")
        img = cv2.imread(img_path, 1)  # read as a 3-channel BGR image
        if img is None:
            # cv2.imread returns None when the file cannot be read
            raise FileNotFoundError(img_path)
        # Resize, convert to float32, and reorder HWC -> CHW
        img = cv2.resize(img, (224, 224)).astype("float32").transpose((2, 0, 1))
        img = img.reshape((-1, 224, 224))
        # Normalize pixel values from [0, 255] to [-0.5, 0.5]
        img = (img - 127.5) / 255.0
        label = int(label)
        return img, label

    def __len__(self):
        return len(self.dataset)

train_set = DatasetN("train_list.txt", T.Compose([T.Normalize(data_format="CHW")]))
val_set = DatasetN("val_list.txt", T.Compose([T.Normalize(data_format="CHW")]))
train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)
val_loader = DataLoader(val_set, batch_size=batch_size)

Build the models

AlexNet

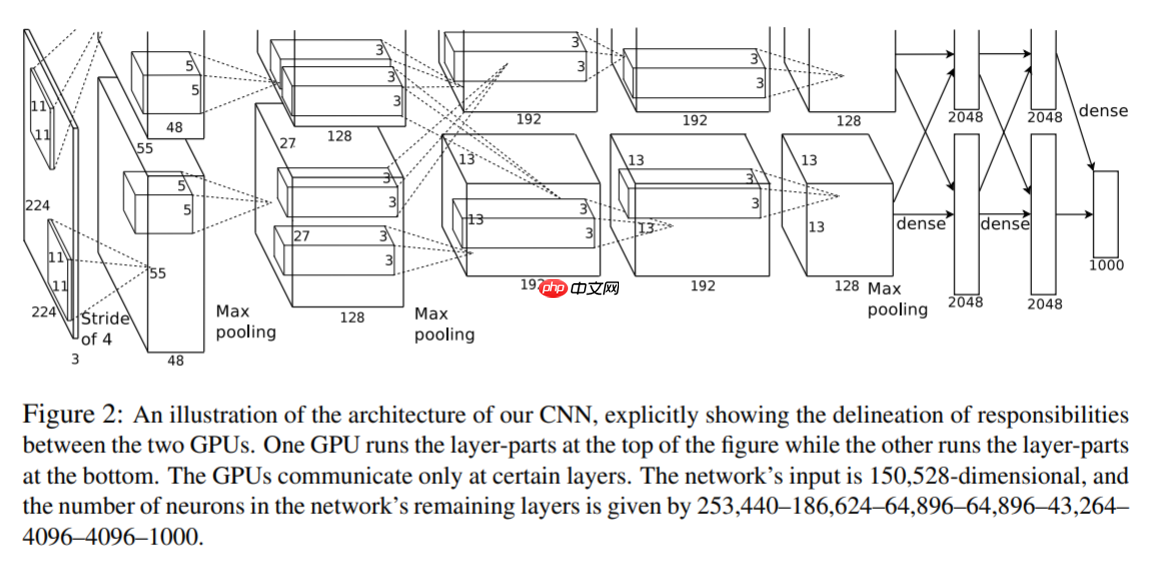

图像分类模型中第一个被广泛注意到的使用卷积的深度学习模型,也是这一波深度学习浪潮的引领者,在2012年的IMAGENET中以远超第二名的成绩夺冠,自此深度学习再次走入人们的视野。这个模型在论文《ImageNet Classification with Deep Convolutional Neural Networks》中被提出。 模型结构如图所示 结构图分成了上下两层,是因为原作者在两块GPU中进行的模型训练,最后进行合并,在理解结构图时只需要看作同一层即可。

结构图分成了上下两层,是因为原作者在两块GPU中进行的模型训练,最后进行合并,在理解结构图时只需要看作同一层即可。

根据结构图,可以看出输入是一个3通道的图像,第一层是大小为11的卷积操作,接着是5* 5卷积和MaxPooling,以及连续的3* 3卷积和MaxPooling,最后将特征图接入全连接层,所以要先Flatten,最后一个全连接层的维度是所分类别的数量,在原文中是1000,需要根据自己的数据集进行修改。通常卷积和全连接层后面都会加上ReLU作为激活函数。
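The input size of the first fully connected layer is the part that is easy to get wrong. As a rough check, the sketch below traces the spatial size through the convolution and pooling settings used by the AlexNet implementation in this article, using the standard floor-mode output-size formula. The helper `out_size` and the `stages` list are illustrative names, not part of Paddle.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size of a conv or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# (description, kernel, stride, padding), in the order the layers are applied
stages = [
    ("conv 11x11, stride 4", 11, 4, 0),
    ("conv 5x5, padding 2", 5, 1, 2),
    ("max pool 3x3, stride 2", 3, 2, 0),
    ("conv 3x3, padding 1", 3, 1, 1),
    ("max pool 3x3, stride 2", 3, 2, 0),
    ("conv 3x3, padding 1", 3, 1, 1),
    ("conv 3x3, padding 1", 3, 1, 1),
    ("max pool 3x3, stride 2", 3, 2, 0),
]

size = 224
sizes = []
for name, kernel, stride, padding in stages:
    size = out_size(size, kernel, stride, padding)
    sizes.append(size)
    print(f"{name:<24} -> {size}x{size}")

# The last convolution has 256 output channels
flat_features = 256 * size * size
print(flat_features)  # 6400
```

The result, 256 × 5 × 5 = 6400, is the input width that the first `nn.Linear` layer needs for a 224×224 input. A different input resolution would change this number.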

# AlexNet
class AlexNet(nn.Layer):
    def __init__(self):
        super().__init__()
        self.layers = nn.LayerList()
        self.layers.append(nn.Sequential(nn.Conv2D(3, 96, kernel_size=11, stride=4), nn.ReLU()))
        self.layers.append(nn.Sequential(nn.Conv2D(96, 256, kernel_size=5, stride=1, padding=2), nn.ReLU(), nn.MaxPool2D(kernel_size=3, stride=2)))
        self.layers.append(nn.Sequential(nn.Conv2D(256, 384, kernel_size=3, stride=1, padding=1), nn.ReLU(), nn.MaxPool2D(kernel_size=3, stride=2, padding=0)))
        self.layers.append(nn.Sequential(nn.Conv2D(384, 384, kernel_size=3, stride=1, padding=1), nn.ReLU()))
        self.layers.append(nn.Sequential(nn.Conv2D(384, 256, kernel_size=3, stride=1, padding=1), nn.ReLU(), nn.MaxPool2D(kernel_size=3, stride=2)))
        # 256 channels * 5 * 5 feature map = 6400 inputs for a 224x224 image
        self.layers.append(nn.Sequential(nn.Flatten(), nn.Linear(6400, 4096), nn.ReLU()))
        self.layers.append(nn.Sequential(nn.Linear(4096, 4096), nn.ReLU()))
        self.layers.append(nn.Sequential(nn.Linear(4096, 2)))  # 2 output classes

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)
        return x

network = AlexNet()
paddle.summary(network, (16, 3, 224, 224))  # verify the structure with the Paddle API

---------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===========================================================================

Conv2D-46 [[16, 3, 224, 224]] [16, 96, 54, 54] 34,944

ReLU-64 [[16, 96, 54, 54]] [16, 96, 54, 54] 0

Conv2D-47 [[16, 96, 54, 54]] [16, 256, 54, 54] 614,656

ReLU-65 [[16, 256, 54, 54]] [16, 256, 54, 54] 0

MaxPool2D-28 [[16, 256, 54, 54]] [16, 256, 26, 26] 0

Conv2D-48 [[16, 256, 26, 26]] [16, 384, 26, 26] 885,120

ReLU-66 [[16, 384, 26, 26]] [16, 384, 26, 26] 0

MaxPool2D-29 [[16, 384, 26, 26]] [16, 384, 12, 12] 0

Conv2D-49 [[16, 384, 12, 12]] [16, 384, 12, 12] 1,327,488

ReLU-67 [[16, 384, 12, 12]] [16, 384, 12, 12] 0

Conv2D-50 [[16, 384, 12, 12]] [16, 256, 12, 12] 884,992

ReLU-68 [[16, 256, 12, 12]] [16, 256, 12, 12] 0

MaxPool2D-30 [[16, 256, 12, 12]] [16, 256, 5, 5] 0

Flatten-17 [[16, 256, 5, 5]] [16, 6400] 0

Linear-28 [[16, 6400]] [16, 4096] 26,218,496

ReLU-69 [[16, 4096]] [16, 4096] 0

Linear-29 [[16, 4096]] [16, 4096] 16,781,312

ReLU-70 [[16, 4096]] [16, 4096] 0

Linear-30 [[16, 4096]] [16, 2] 8,194

===========================================================================

Total params: 46,755,202

Trainable params: 46,755,202

Non-trainable params: 0

---------------------------------------------------------------------------

Input size (MB): 9.19

Forward/backward pass size (MB): 367.91

Params size (MB): 178.36

Estimated Total Size (MB): 555.45

---------------------------------------------------------------------------
{'total_params': 46755202, 'trainable_params': 46755202}

In the training and validation stage, the validation predictions are:

Predict begin...
step 10/10 [==============================] - 5ms/step
Predict samples: 10
[1 1 0 1 0 0 0 1 0 1]

The correct labels are 1 1 0 1 0 0 0 1 0 1
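Comparing the two rows by eye works for ten samples but not for more. A minimal sketch of the same comparison in plain Python, using the predictions and labels printed above, could look like this (the variable names are illustrative):

```python
# Predictions and ground-truth labels copied from the output above
predictions = [1, 1, 0, 1, 0, 0, 0, 1, 0, 1]
labels = [1, 1, 0, 1, 0, 0, 0, 1, 0, 1]

# Count positions where the predicted class equals the true class
correct = sum(p == t for p, t in zip(predictions, labels))
accuracy = correct / len(labels)
print(f"{correct}/{len(labels)} correct, accuracy = {accuracy:.2f}")
```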

VGG Net

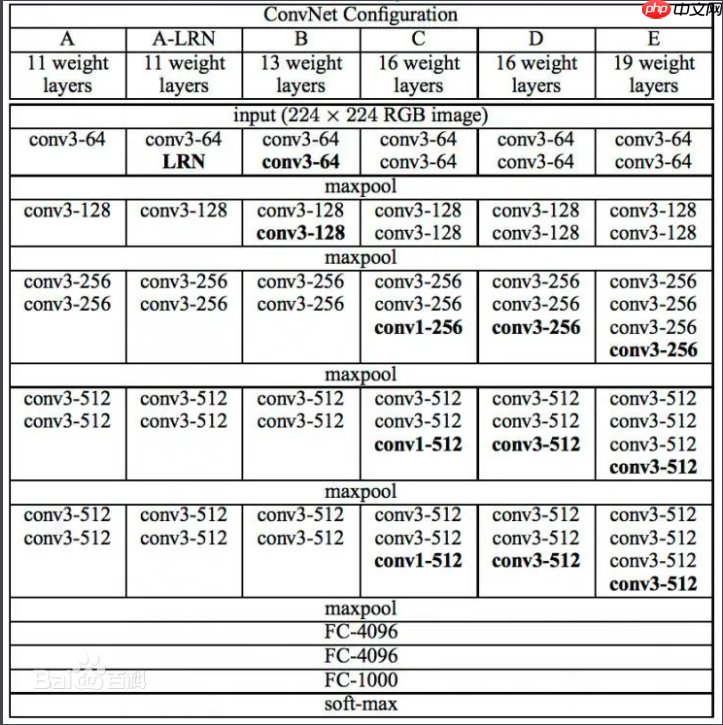

VGG网络是继AlexNet之后的另一个卷积网络,主要工作是使用了3* 3的卷积以及更深的网络,其基本结构还是Conv+Pooling,在论文 Very Deep Convolutional Networks for Large-Scale Image Recognition由Oxford Visual Geometry Group提出,常用的结构有VGG11、VGG13、VGG16、VGG19,是2014年ILSVRC竞赛的第二名,第一名是GoogLeNet,但是在很多的迁移学习中,VGG表现更优。模型结构图如下
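Because VGG is just a stack of 3×3 convolutions, 2×2 poolings, and three fully connected layers, its parameter count can be worked out by hand. The sketch below does this for the VGG11 layout and the 4096-wide classifier used in this article, with 2 output classes. The function names are illustrative, and the count assumes every layer has a bias term.

```python
VGG11 = [64, 'P', 128, 'P', 256, 256, 'P', 512, 512, 'P', 512, 512, 'P']


def conv_params(in_c, out_c, kernel=3):
    """Weights (in_c * out_c * k * k) plus one bias per output channel."""
    return in_c * out_c * kernel * kernel + out_c


def linear_params(in_f, out_f):
    """Weights (in_f * out_f) plus one bias per output feature."""
    return in_f * out_f + out_f


def vgg_params(cfg, num_classes, in_c=3, image_size=224):
    total = 0
    size = image_size
    for v in cfg:
        if v == 'P':
            size //= 2  # 2x2 max pooling halves the spatial size
        else:
            total += conv_params(in_c, v)
            in_c = v
    flat = in_c * size * size  # 512 * 7 * 7 = 25088 for a 224x224 input
    total += linear_params(flat, 4096)
    total += linear_params(4096, 4096)
    total += linear_params(4096, num_classes)
    return total


total = vgg_params(VGG11, num_classes=2)
print(total)  # 128774530
```

Almost 80% of these parameters sit in the first fully connected layer (25088 × 4096), which is why the convolutional part of VGG is comparatively cheap to store.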

# VGG Net
class VGG(nn.Layer):
    def __init__(self, features, num_classes):
        super().__init__()
        self.features = features
        self.cls = nn.Sequential(nn.Dropout(0.5), nn.Linear(7*7*512, 4096), nn.ReLU(), nn.Linear(4096, 4096), nn.ReLU(), nn.Linear(4096, num_classes))

    def forward(self, x):
        x = self.features(x)
        x = self.cls(x)
        return x

def make_features(cfg):
    layers = []
    in_c = 3
    for v in cfg:
        if v == "P":
            # "P" marks a 2x2 max pooling layer
            layers.append(nn.MaxPool2D(2))
        else:
            # A number is the output channel count of a 3x3 convolution
            layers.append(nn.Conv2D(in_c, v, kernel_size=3, stride=1, padding=1))
            layers.append(nn.ReLU())
            in_c = v
    layers.append(nn.Flatten())
    return nn.Sequential(*layers)

cfg = {
    'VGG11': [64, 'P', 128, 'P', 256, 256, 'P', 512, 512, 'P', 512, 512, 'P'],
    'VGG13': [64, 64, 'P', 128, 128, 'P', 256, 256, 'P', 512, 512, 'P', 512, 512, 'P'],
    'VGG16': [64, 64, 'P', 128, 128, 'P', 256, 256, 256, 'P', 512, 512, 512, 'P', 512, 512, 512, 'P'],
    'VGG19': [64, 64, 'P', 128, 128, 'P', 256, 256, 256, 256, 'P', 512, 512, 512, 512, 'P', 512, 512, 512, 512, 'P'],
}

features = make_features(cfg["VGG11"])
network = VGG(features, 2)
paddle.summary(network, (16, 3, 224, 224))  # verify the structure with the Paddle API

----------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

============================================================================

Conv2D-51 [[16, 3, 224, 224]] [16, 64, 224, 224] 1,792

ReLU-71 [[16, 64, 224, 224]] [16, 64, 224, 224] 0

MaxPool2D-31 [[16, 64, 224, 224]] [16, 64, 112, 112] 0

Conv2D-52 [[16, 64, 112, 112]] [16, 128, 112, 112] 73,856

ReLU-72 [[16, 128, 112, 112]] [16, 128, 112, 112] 0

MaxPool2D-32 [[16, 128, 112, 112]] [16, 128, 56, 56] 0

Conv2D-53 [[16, 128, 56, 56]] [16, 256, 56, 56] 295,168

ReLU-73 [[16, 256, 56, 56]] [16, 256, 56, 56] 0

Conv2D-54 [[16, 256, 56, 56]] [16, 256, 56, 56] 590,080

ReLU-74 [[16, 256, 56, 56]] [16, 256, 56, 56] 0

MaxPool2D-33 [[16, 256, 56, 56]] [16, 256, 28, 28] 0

Conv2D-55 [[16, 256, 28, 28]] [16, 512, 28, 28] 1,180,160

ReLU-75 [[16, 512, 28, 28]] [16, 512, 28, 28] 0

Conv2D-56 [[16, 512, 28, 28]] [16, 512, 28, 28] 2,359,808

ReLU-76 [[16, 512, 28, 28]] [16, 512, 28, 28] 0

MaxPool2D-34 [[16, 512, 28, 28]] [16, 512, 14, 14] 0

Conv2D-57 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808

ReLU-77 [[16, 512, 14, 14]] [16, 512, 14, 14] 0

Conv2D-58 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808

ReLU-78 [[16, 512, 14, 14]] [16, 512, 14, 14] 0

MaxPool2D-35 [[16, 512, 14, 14]] [16, 512, 7, 7] 0

Flatten-19 [[16, 512, 7, 7]] [16, 25088] 0

Dropout-1 [[16, 25088]] [16, 25088] 0

Linear-31 [[16, 25088]] [16, 4096] 102,764,544

ReLU-79 [[16, 4096]] [16, 4096] 0

Linear-32 [[16, 4096]] [16, 4096] 16,781,312

ReLU-80 [[16, 4096]] [16, 4096] 0

Linear-33 [[16, 4096]] [16, 2] 8,194

============================================================================

Total params: 128,774,530

Trainable params: 128,774,530

Non-trainable params: 0

----------------------------------------------------------------------------

Input size (MB): 9.19

Forward/backward pass size (MB): 2007.94

Params size (MB): 491.24

Estimated Total Size (MB): 2508.36

----------------------------------------------------------------------------
{'total_params': 128774530, 'trainable_params': 128774530}

Training and validation results for VGG11:

Predict begin...
step 10/10 [==============================] - 6ms/step
Predict samples: 10
[1 1 0 1 0 0 0 1 0 1]

ResNet

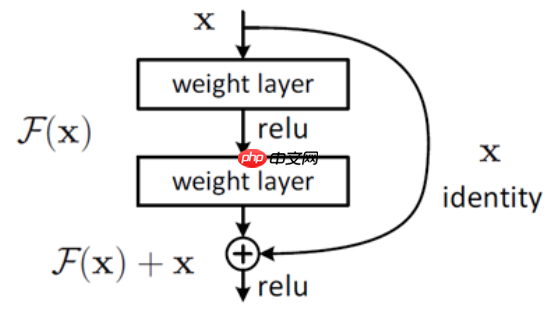

大名鼎鼎的残差网络ResNet,残差块的提出使更深的网络模型成为可能,残差块的结构如图所示, 增加了一个旁路连接,就使深层模型的训练成为可能。 ResNet模型的实现重点在于残差块的实现,这里单独定义一个残差块的类,在forward中

增加了一个旁路连接,就使深层模型的训练成为可能。 ResNet模型的实现重点在于残差块的实现,这里单独定义一个残差块的类,在forward中

def forward(self,x): copy_x=x x=self.conv(x) if self.in_c!=self.out_c: copy_x=self.w(copy_x) return x+copy_x

分为两条路计算,一条是经典的卷积层,一条是旁路连接,同时如果卷积前后特征图通道数不同,则在旁路中补充一个卷积以调整。
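The addition `x + copy_x` only works if both paths produce feature maps of the same size. The sketch below checks this in plain Python for the downsampling case, assuming the settings used by the Resblock class in this article: a stride-2 3×3 convolution with padding 1 followed by a stride-1 3×3 convolution on the main path, and a stride-2 1×1 convolution on the shortcut. The helper names are illustrative.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def resblock_sizes(size, pool):
    """Return the spatial sizes produced by the main path and the shortcut."""
    stride = 2 if pool else 1
    main = conv_out(size, kernel=3, stride=stride, padding=1)
    main = conv_out(main, kernel=3, stride=1, padding=1)
    shortcut = conv_out(size, kernel=1, stride=stride)
    return main, shortcut


# Input sizes seen by the three downsampling blocks for a 224x224 image
results = {size: resblock_sizes(size, pool=True) for size in (110, 55, 28)}
for size, (main, shortcut) in results.items():
    print(f"{size} -> main {main}, shortcut {shortcut}")

# Final feature map: 512 channels at 14x14
flat_features = 512 * 14 * 14
print(flat_features)  # 100352
```

Both paths agree at every stage, including the odd size 55, where floor division rounds both to 28. The final 512 × 14 × 14 = 100352 features are what the last linear layer receives.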

class Resblock(nn.Layer):
    def __init__(self, in_c, out_c, pool=False):
        super(Resblock, self).__init__()
        self.in_c, self.out_c = in_c, out_c
        if pool:
            # Downsampling block: the first conv and the shortcut both use stride 2
            self.conv = nn.Sequential(nn.Conv2D(in_c, out_c, 3, stride=2, padding=1), nn.Conv2D(out_c, out_c, 3, padding=1), nn.BatchNorm(out_c))
            self.w = nn.Conv2D(in_c, out_c, kernel_size=1, stride=2)
        else:
            self.conv = nn.Sequential(nn.Conv2D(in_c, out_c, 3, padding=1), nn.Conv2D(out_c, out_c, 3, padding=1), nn.BatchNorm(out_c))
            # Only used in forward when in_c != out_c
            self.w = nn.Conv2D(in_c, out_c, kernel_size=1)

    def forward(self, x):
        copy_x = x
        x = self.conv(x)
        if self.in_c != self.out_c:
            # Match the channel count on the shortcut path
            copy_x = self.w(copy_x)
        return x + copy_x

class ResNet(nn.Layer):
    def __init__(self):
        super().__init__()
        self.layers = nn.LayerList()
        self.layers.append(nn.Sequential(nn.Conv2D(in_channels=3, out_channels=64, kernel_size=7, stride=2, padding=1)))  # ,nn.MaxPool2D(2)
        for ind in range(3):
            self.layers.append(Resblock(64, 64))
        self.layers.append(Resblock(64, 128, True))
        for ind in range(3):
            self.layers.append(Resblock(128, 128))
        self.layers.append(Resblock(128, 256, True))
        for ind in range(5):
            self.layers.append(Resblock(256, 256))
        self.layers.append(Resblock(256, 512, True))
        for ind in range(2):
            self.layers.append(Resblock(512, 512))
        # 512 channels * 14 * 14 feature map = 100352 inputs for a 224x224 image
        self.layers.append(nn.Sequential(nn.Flatten(), nn.Linear(100352, 2)))

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)
        return x

network = ResNet()

paddle.summary(network, (16, 3, 224, 224))  # verify the structure with the Paddle API

---------------------------------------------------------------------------
Layer (type) Input Shape Output Shape Param #
===========================================================================
Conv2D-59 [[16, 3, 224, 224]] [16, 64, 110, 110] 9,472
Conv2D-60 [[16, 64, 110, 110]] [16, 64, 110, 110] 36,928
Conv2D-61 [[16, 64, 110, 110]] [16, 64, 110, 110] 36,928
BatchNorm-1 [[16, 64, 110, 110]] [16, 64, 110, 110] 256
Resblock-1 [[16, 64, 110, 110]] [16, 64, 110, 110] 0
Conv2D-63 [[16, 64, 110, 110]] [16, 64, 110, 110] 36,928
Conv2D-64 [[16, 64, 110, 110]] [16, 64, 110, 110] 36,928
BatchNorm-2 [[16, 64, 110, 110]] [16, 64, 110, 110] 256
Resblock-2 [[16, 64, 110, 110]] [16, 64, 110, 110] 0
Conv2D-66 [[16, 64, 110, 110]] [16, 64, 110, 110] 36,928
Conv2D-67 [[16, 64, 110, 110]] [16, 64, 110, 110] 36,928
BatchNorm-3 [[16, 64, 110, 110]] [16, 64, 110, 110] 256
Resblock-3 [[16, 64, 110, 110]] [16, 64, 110, 110] 0
Conv2D-69 [[16, 64, 110, 110]] [16, 128, 55, 55] 73,856
Conv2D-70 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
BatchNorm-4 [[16, 128, 55, 55]] [16, 128, 55, 55] 512
Conv2D-71 [[16, 64, 110, 110]] [16, 128, 55, 55] 8,320
Resblock-4 [[16, 64, 110, 110]] [16, 128, 55, 55] 0
Conv2D-72 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
Conv2D-73 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
BatchNorm-5 [[16, 128, 55, 55]] [16, 128, 55, 55] 512
Resblock-5 [[16, 128, 55, 55]] [16, 128, 55, 55] 0
Conv2D-75 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
Conv2D-76 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
BatchNorm-6 [[16, 128, 55, 55]] [16, 128, 55, 55] 512
Resblock-6 [[16, 128, 55, 55]] [16, 128, 55, 55] 0
Conv2D-78 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
Conv2D-79 [[16, 128, 55, 55]] [16, 128, 55, 55] 147,584
BatchNorm-7 [[16, 128, 55, 55]] [16, 128, 55, 55] 512
Resblock-7 [[16, 128, 55, 55]] [16, 128, 55, 55] 0
Conv2D-81 [[16, 128, 55, 55]] [16, 256, 28, 28] 295,168
Conv2D-82 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
BatchNorm-8 [[16, 256, 28, 28]] [16, 256, 28, 28] 1,024
Conv2D-83 [[16, 128, 55, 55]] [16, 256, 28, 28] 33,024
Resblock-8 [[16, 128, 55, 55]] [16, 256, 28, 28] 0
Conv2D-84 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
Conv2D-85 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
BatchNorm-9 [[16, 256, 28, 28]] [16, 256, 28, 28] 1,024
Resblock-9 [[16, 256, 28, 28]] [16, 256, 28, 28] 0
Conv2D-87 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
Conv2D-88 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
BatchNorm-10 [[16, 256, 28, 28]] [16, 256, 28, 28] 1,024
Resblock-10 [[16, 256, 28, 28]] [16, 256, 28, 28] 0
Conv2D-90 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
Conv2D-91 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
BatchNorm-11 [[16, 256, 28, 28]] [16, 256, 28, 28] 1,024
Resblock-11 [[16, 256, 28, 28]] [16, 256, 28, 28] 0
Conv2D-93 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
Conv2D-94 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
BatchNorm-12 [[16, 256, 28, 28]] [16, 256, 28, 28] 1,024
Resblock-12 [[16, 256, 28, 28]] [16, 256, 28, 28] 0
Conv2D-96 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
Conv2D-97 [[16, 256, 28, 28]] [16, 256, 28, 28] 590,080
BatchNorm-13 [[16, 256, 28, 28]] [16, 256, 28, 28] 1,024
Resblock-13 [[16, 256, 28, 28]] [16, 256, 28, 28] 0
Conv2D-99 [[16, 256, 28, 28]] [16, 512, 14, 14] 1,180,160
Conv2D-100 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808
BatchNorm-14 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,048
Conv2D-101 [[16, 256, 28, 28]] [16, 512, 14, 14] 131,584
Resblock-14 [[16, 256, 28, 28]] [16, 512, 14, 14] 0
Conv2D-102 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808
Conv2D-103 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808
BatchNorm-15 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,048
Resblock-15 [[16, 512, 14, 14]] [16, 512, 14, 14] 0
Conv2D-105 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808
Conv2D-106 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,359,808
BatchNorm-16 [[16, 512, 14, 14]] [16, 512, 14, 14] 2,048
Resblock-16 [[16, 512, 14, 14]] [16, 512, 14, 14] 0
Flatten-21 [[16, 512, 14, 14]] [16, 100352] 0
Linear-34 [[16, 100352]] [16, 2] 200,706
===========================================================================
Total params: 21,491,970
Trainable params: 21,476,866
Non-trainable params: 15,104
---------------------------------------------------------------------------
Input size (MB): 9.19
Forward/backward pass size (MB): 2816.42
Params size (MB): 81.99
Estimated Total Size (MB): 2907.59
---------------------------------------------------------------------------
{'total_params': 21491970, 'trainable_params': 21476866}

In the training and validation stage, the validation predictions are:

Predict begin...
step 10/10 [==============================] - 13ms/step
Predict samples: 10
[1 1 0 1 0 0 0 1 0 1]

DenseNet

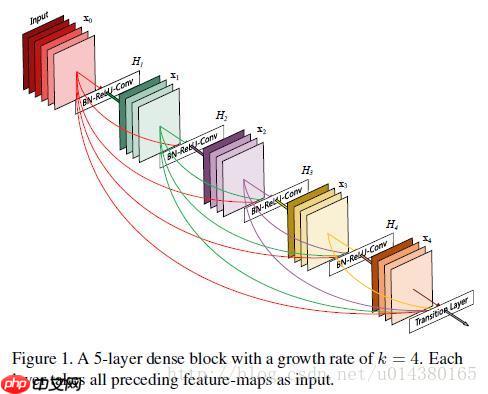

DenseNet于2017被提出,作者没有根据业内的潮流对网络进行deeper/wider结构的改造,是对feature特征入手,在网络连通的前提下,对所有特征层进行了连接,在核心组件DenseBlock中,这一层的输入是之前所有层的输出,如图所示

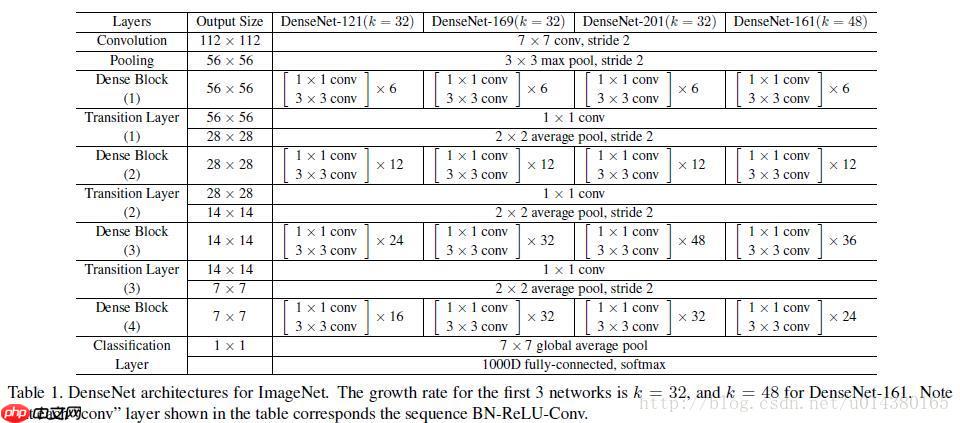
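Because each layer receives everything produced before it, the channel count grows linearly inside a block. The sketch below tracks this in plain Python, assuming each layer adds `growth_rate` new channels and that the block starts from 64 channels with 6 layers and a growth rate of 32, as in the first block of the model in this article. The function name is illustrative.

```python
def dense_block_channels(in_c, num_layers, growth_rate):
    """Return the input channel count of every layer and the block output."""
    layer_inputs = []
    channels = in_c
    for _ in range(num_layers):
        layer_inputs.append(channels)  # the layer sees all features so far
        channels += growth_rate        # and contributes growth_rate new ones
    return layer_inputs, channels


layer_inputs, block_out = dense_block_channels(in_c=64, num_layers=6, growth_rate=32)
print(layer_inputs)  # [64, 96, 128, 160, 192, 224]
print(block_out)     # 256
```

Each layer produces only 32 channels, yet the block output carries 256 because the original input and every intermediate output are concatenated rather than summed.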

在此基础上,作者构造了DenseNet,其结构如下

# DenseNet
from paddle.utils.download import get_weights_path_from_url

# No pretrained weights are registered in this article, so pretrained=True will fail the assert below.
model_urls = {}

class DenseLayer(nn.Layer):
    def __init__(self, in_c, growth_rate, bn_size):
        super().__init__()
        out_c = growth_rate * bn_size  # width of the 1x1 bottleneck
        self.layers = nn.Sequential(nn.BatchNorm2D(in_c), nn.ReLU(), nn.Conv2D(in_c, out_c, 1), nn.BatchNorm2D(out_c), nn.ReLU(), nn.Conv2D(out_c, growth_rate, 3, padding=1))

    def forward(self, x):
        y = self.layers(x)
        return y

class DenseBlock(nn.Layer):
    def __init__(self, num_layers, in_c, growth_rate, bn_size):
        super().__init__()
        self.layers = nn.LayerList()
        for ind in range(num_layers):
            # Layer ind receives the block input plus the outputs of all earlier layers
            self.layers.append(
                DenseLayer(in_c=in_c + ind * growth_rate,
                           growth_rate=growth_rate,
                           bn_size=bn_size)
            )

    def forward(self, x):
        features = [x]
        for layer in self.layers:
            new_x = layer(paddle.concat(features, axis=1))
            features.append(new_x)
        return paddle.concat(features, axis=1)

class Transition(nn.Layer):
    def __init__(self, in_c, out_c):
        super().__init__()
        self.layers = nn.Sequential(nn.BatchNorm2D(in_c), nn.ReLU(), nn.Conv2D(in_c, out_c, 1), nn.AvgPool2D(2, 2))

    def forward(self, x):
        return self.layers(x)

class DenseNet(nn.Layer):
    def __init__(self, num_classes, growth_rate=32, block=(6, 12, 24, 16), bn_size=4, out_c=64):
        super().__init__()
        self.conv_pool = nn.Sequential(nn.Conv2D(3, out_c, 7, stride=2, padding=3), nn.MaxPool2D(3, 2))
        self.blocks = nn.LayerList()
        in_c = out_c
        for ind, n in enumerate(block):
            self.blocks.append(DenseBlock(n, in_c, growth_rate, bn_size))
            in_c += growth_rate * n
            if ind != len(block) - 1:
                # A transition halves the channel count between dense blocks
                self.blocks.append(Transition(in_c, in_c // 2))
                in_c //= 2
        self.blocks.append(nn.Sequential(nn.BatchNorm2D(in_c), nn.ReLU(), nn.AdaptiveAvgPool2D((1, 1)), nn.Flatten()))
        self.cls = nn.Linear(in_c, num_classes)

    def forward(self, x):
        x = self.conv_pool(x)
        for layer in self.blocks:
            x = layer(x)
        x = self.cls(x)
        return x

def _DenseNet(arch, block_cfg, batch_norm, pretrained, **kwargs):
    model = DenseNet(block=block_cfg, **kwargs)
    if pretrained:
        assert arch in model_urls, "{} model do not have a pretrained model now, you should set pretrained=False".format(
            arch)
        weight_path = get_weights_path_from_url(model_urls[arch][0],
                                                model_urls[arch][1])
        param = paddle.load(weight_path)
        model.load_dict(param)
    return model

def DenseNet121(pretrained=False, batch_norm=False, **kwargs):
    model_name = 'DenseNet121'
    if batch_norm:
        model_name += ('_bn')
    return _DenseNet(model_name, (6, 12, 24, 16), batch_norm, pretrained, **kwargs)

def DenseNet161(pretrained=False, batch_norm=False, **kwargs):
    model_name = 'DenseNet161'
    if batch_norm:
        model_name += ('_bn')
    # In the DenseNet paper, DenseNet-161 uses growth rate 48 and 96 initial channels
    kwargs.setdefault("growth_rate", 48)
    kwargs.setdefault("out_c", 96)
    return _DenseNet(model_name, (6, 12, 36, 24), batch_norm, pretrained, **kwargs)

def DenseNet169(pretrained=False, batch_norm=False, **kwargs):
    model_name = 'DenseNet169'
    if batch_norm:
        model_name += ('_bn')
    return _DenseNet(model_name, (6, 12, 32, 32), batch_norm, pretrained, **kwargs)

def DenseNet201(pretrained=False, batch_norm=False, **kwargs):
    model_name = 'DenseNet201'
    if batch_norm:
        model_name += ('_bn')
    return _DenseNet(model_name, (6, 12, 48, 32), batch_norm, pretrained, **kwargs)

network = DenseNet(2)
paddle.summary(network, (16, 3, 224, 224))  # verify the structure with the Paddle API

-------------------------------------------------------------------------------

Layer (type) Input Shape Output Shape Param #

===============================================================================

Conv2D-108 [[16, 3, 224, 224]] [16, 64, 112, 112] 9,472

MaxPool2D-36 [[16, 64, 112, 112]] [16, 64, 55, 55] 0

BatchNorm2D-1 [[16, 64, 55, 55]] [16, 64, 55, 55] 256

ReLU-81 [[16, 64, 55, 55]] [16, 64, 55, 55] 0

Conv2D-109 [[16, 64, 55, 55]] [16, 128, 55, 55] 8,320

BatchNorm2D-2 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-82 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-110 [[16, 128, 55, 55]] [16, 32, 55, 55] 36,896

DenseLayer-1 [[16, 64, 55, 55]] [16, 32, 55, 55] 0

BatchNorm2D-3 [[16, 96, 55, 55]] [16, 96, 55, 55] 384

ReLU-83 [[16, 96, 55, 55]] [16, 96, 55, 55] 0

Conv2D-111 [[16, 96, 55, 55]] [16, 128, 55, 55] 12,416

BatchNorm2D-4 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-84 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-112 [[16, 128, 55, 55]] [16, 32, 55, 55] 36,896

DenseLayer-2 [[16, 96, 55, 55]] [16, 32, 55, 55] 0

BatchNorm2D-5 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-85 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-113 [[16, 128, 55, 55]] [16, 128, 55, 55] 16,512

BatchNorm2D-6 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-86 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-114 [[16, 128, 55, 55]] [16, 32, 55, 55] 36,896

DenseLayer-3 [[16, 128, 55, 55]] [16, 32, 55, 55] 0

BatchNorm2D-7 [[16, 160, 55, 55]] [16, 160, 55, 55] 640

ReLU-87 [[16, 160, 55, 55]] [16, 160, 55, 55] 0

Conv2D-115 [[16, 160, 55, 55]] [16, 128, 55, 55] 20,608

BatchNorm2D-8 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-88 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-116 [[16, 128, 55, 55]] [16, 32, 55, 55] 36,896

DenseLayer-4 [[16, 160, 55, 55]] [16, 32, 55, 55] 0

BatchNorm2D-9 [[16, 192, 55, 55]] [16, 192, 55, 55] 768

ReLU-89 [[16, 192, 55, 55]] [16, 192, 55, 55] 0

Conv2D-117 [[16, 192, 55, 55]] [16, 128, 55, 55] 24,704

BatchNorm2D-10 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-90 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-118 [[16, 128, 55, 55]] [16, 32, 55, 55] 36,896

DenseLayer-5 [[16, 192, 55, 55]] [16, 32, 55, 55] 0

BatchNorm2D-11 [[16, 224, 55, 55]] [16, 224, 55, 55] 896

ReLU-91 [[16, 224, 55, 55]] [16, 224, 55, 55] 0

Conv2D-119 [[16, 224, 55, 55]] [16, 128, 55, 55] 28,800

BatchNorm2D-12 [[16, 128, 55, 55]] [16, 128, 55, 55] 512

ReLU-92 [[16, 128, 55, 55]] [16, 128, 55, 55] 0

Conv2D-120 [[16, 128, 55, 55]] [16, 32, 55, 55] 36,896

DenseLayer-6 [[16, 224, 55, 55]] [16, 32, 55, 55] 0

DenseBlock-1 [[16, 64, 55, 55]] [16, 256, 55, 55] 0

BatchNorm2D-13 [[16, 256, 55, 55]] [16, 256, 55, 55] 1,024

ReLU-93 [[16, 256, 55, 55]] [16, 256, 55, 55] 0

Conv2D-121 [[16, 256, 55, 55]] [16, 128, 55, 55] 32,896

AvgPool2D-1 [[16, 128, 55, 55]] [16, 128, 27, 27] 0

Transition-1 [[16, 256, 55, 55]] [16, 128, 27, 27] 0

BatchNorm2D-14 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-94 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-122 [[16, 128, 27, 27]] [16, 128, 27, 27] 16,512

BatchNorm2D-15 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-95 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-123 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-7 [[16, 128, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-16 [[16, 160, 27, 27]] [16, 160, 27, 27] 640

ReLU-96 [[16, 160, 27, 27]] [16, 160, 27, 27] 0

Conv2D-124 [[16, 160, 27, 27]] [16, 128, 27, 27] 20,608

BatchNorm2D-17 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-97 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-125 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-8 [[16, 160, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-18 [[16, 192, 27, 27]] [16, 192, 27, 27] 768

ReLU-98 [[16, 192, 27, 27]] [16, 192, 27, 27] 0

Conv2D-126 [[16, 192, 27, 27]] [16, 128, 27, 27] 24,704

BatchNorm2D-19 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-99 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-127 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-9 [[16, 192, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-20 [[16, 224, 27, 27]] [16, 224, 27, 27] 896

ReLU-100 [[16, 224, 27, 27]] [16, 224, 27, 27] 0

Conv2D-128 [[16, 224, 27, 27]] [16, 128, 27, 27] 28,800

BatchNorm2D-21 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-101 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-129 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-10 [[16, 224, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-22 [[16, 256, 27, 27]] [16, 256, 27, 27] 1,024

ReLU-102 [[16, 256, 27, 27]] [16, 256, 27, 27] 0

Conv2D-130 [[16, 256, 27, 27]] [16, 128, 27, 27] 32,896

BatchNorm2D-23 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-103 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-131 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-11 [[16, 256, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-24 [[16, 288, 27, 27]] [16, 288, 27, 27] 1,152

ReLU-104 [[16, 288, 27, 27]] [16, 288, 27, 27] 0

Conv2D-132 [[16, 288, 27, 27]] [16, 128, 27, 27] 36,992

BatchNorm2D-25 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-105 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-133 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-12 [[16, 288, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-26 [[16, 320, 27, 27]] [16, 320, 27, 27] 1,280

ReLU-106 [[16, 320, 27, 27]] [16, 320, 27, 27] 0

Conv2D-134 [[16, 320, 27, 27]] [16, 128, 27, 27] 41,088

BatchNorm2D-27 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-107 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-135 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-13 [[16, 320, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-28 [[16, 352, 27, 27]] [16, 352, 27, 27] 1,408

ReLU-108 [[16, 352, 27, 27]] [16, 352, 27, 27] 0

Conv2D-136 [[16, 352, 27, 27]] [16, 128, 27, 27] 45,184

BatchNorm2D-29 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-109 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-137 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-14 [[16, 352, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-30 [[16, 384, 27, 27]] [16, 384, 27, 27] 1,536

ReLU-110 [[16, 384, 27, 27]] [16, 384, 27, 27] 0

Conv2D-138 [[16, 384, 27, 27]] [16, 128, 27, 27] 49,280

BatchNorm2D-31 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-111 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-139 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-15 [[16, 384, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-32 [[16, 416, 27, 27]] [16, 416, 27, 27] 1,664

ReLU-112 [[16, 416, 27, 27]] [16, 416, 27, 27] 0

Conv2D-140 [[16, 416, 27, 27]] [16, 128, 27, 27] 53,376

BatchNorm2D-33 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-113 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-141 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-16 [[16, 416, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-34 [[16, 448, 27, 27]] [16, 448, 27, 27] 1,792

ReLU-114 [[16, 448, 27, 27]] [16, 448, 27, 27] 0

Conv2D-142 [[16, 448, 27, 27]] [16, 128, 27, 27] 57,472

BatchNorm2D-35 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-115 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-143 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-17 [[16, 448, 27, 27]] [16, 32, 27, 27] 0

BatchNorm2D-36 [[16, 480, 27, 27]] [16, 480, 27, 27] 1,920

ReLU-116 [[16, 480, 27, 27]] [16, 480, 27, 27] 0

Conv2D-144 [[16, 480, 27, 27]] [16, 128, 27, 27] 61,568

BatchNorm2D-37 [[16, 128, 27, 27]] [16, 128, 27, 27] 512

ReLU-117 [[16, 128, 27, 27]] [16, 128, 27, 27] 0

Conv2D-145 [[16, 128, 27, 27]] [16, 32, 27, 27] 36,896

DenseLayer-18 [[16, 480, 27, 27]] [16, 32, 27, 27] 0

DenseBlock-2 [[16, 128, 27, 27]] [16, 512, 27, 27] 0

BatchNorm2D-38 [[16, 512, 27, 27]] [16, 512, 27, 27] 2,048

ReLU-118 [[16, 512, 27, 27]] [16, 512, 27, 27] 0

Conv2D-146 [[16, 512, 27, 27]] [16, 256, 27, 27] 131,328

AvgPool2D-2 [[16, 256, 27, 27]] [16, 256, 13, 13] 0

Transition-2 [[16, 512, 27, 27]] [16, 256, 13, 13] 0

BatchNorm2D-39 [[16, 256, 13, 13]] [16, 256, 13, 13] 1,024

ReLU-119 [[16, 256, 13, 13]] [16, 256, 13, 13] 0

Conv2D-147 [[16, 256, 13, 13]] [16, 128, 13, 13] 32,896

BatchNorm2D-40 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-120 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-148 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-19 [[16, 256, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-41 [[16, 288, 13, 13]] [16, 288, 13, 13] 1,152

ReLU-121 [[16, 288, 13, 13]] [16, 288, 13, 13] 0

Conv2D-149 [[16, 288, 13, 13]] [16, 128, 13, 13] 36,992

BatchNorm2D-42 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-122 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-150 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-20 [[16, 288, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-43 [[16, 320, 13, 13]] [16, 320, 13, 13] 1,280

ReLU-123 [[16, 320, 13, 13]] [16, 320, 13, 13] 0

Conv2D-151 [[16, 320, 13, 13]] [16, 128, 13, 13] 41,088

BatchNorm2D-44 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-124 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-152 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-21 [[16, 320, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-45 [[16, 352, 13, 13]] [16, 352, 13, 13] 1,408

ReLU-125 [[16, 352, 13, 13]] [16, 352, 13, 13] 0

Conv2D-153 [[16, 352, 13, 13]] [16, 128, 13, 13] 45,184

BatchNorm2D-46 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-126 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-154 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-22 [[16, 352, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-47 [[16, 384, 13, 13]] [16, 384, 13, 13] 1,536

ReLU-127 [[16, 384, 13, 13]] [16, 384, 13, 13] 0

Conv2D-155 [[16, 384, 13, 13]] [16, 128, 13, 13] 49,280

BatchNorm2D-48 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-128 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-156 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-23 [[16, 384, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-49 [[16, 416, 13, 13]] [16, 416, 13, 13] 1,664

ReLU-129 [[16, 416, 13, 13]] [16, 416, 13, 13] 0

Conv2D-157 [[16, 416, 13, 13]] [16, 128, 13, 13] 53,376

BatchNorm2D-50 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-130 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-158 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-24 [[16, 416, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-51 [[16, 448, 13, 13]] [16, 448, 13, 13] 1,792

ReLU-131 [[16, 448, 13, 13]] [16, 448, 13, 13] 0

Conv2D-159 [[16, 448, 13, 13]] [16, 128, 13, 13] 57,472

BatchNorm2D-52 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-132 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-160 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-25 [[16, 448, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-53 [[16, 480, 13, 13]] [16, 480, 13, 13] 1,920

ReLU-133 [[16, 480, 13, 13]] [16, 480, 13, 13] 0

Conv2D-161 [[16, 480, 13, 13]] [16, 128, 13, 13] 61,568

BatchNorm2D-54 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-134 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-162 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-26 [[16, 480, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-55 [[16, 512, 13, 13]] [16, 512, 13, 13] 2,048

ReLU-135 [[16, 512, 13, 13]] [16, 512, 13, 13] 0

Conv2D-163 [[16, 512, 13, 13]] [16, 128, 13, 13] 65,664

BatchNorm2D-56 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-136 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-164 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-27 [[16, 512, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-57 [[16, 544, 13, 13]] [16, 544, 13, 13] 2,176

ReLU-137 [[16, 544, 13, 13]] [16, 544, 13, 13] 0

Conv2D-165 [[16, 544, 13, 13]] [16, 128, 13, 13] 69,760

BatchNorm2D-58 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-138 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-166 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-28 [[16, 544, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-59 [[16, 576, 13, 13]] [16, 576, 13, 13] 2,304

ReLU-139 [[16, 576, 13, 13]] [16, 576, 13, 13] 0

Conv2D-167 [[16, 576, 13, 13]] [16, 128, 13, 13] 73,856

BatchNorm2D-60 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-140 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-168 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-29 [[16, 576, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-61 [[16, 608, 13, 13]] [16, 608, 13, 13] 2,432

ReLU-141 [[16, 608, 13, 13]] [16, 608, 13, 13] 0

Conv2D-169 [[16, 608, 13, 13]] [16, 128, 13, 13] 77,952

BatchNorm2D-62 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-142 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-170 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-30 [[16, 608, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-63 [[16, 640, 13, 13]] [16, 640, 13, 13] 2,560

ReLU-143 [[16, 640, 13, 13]] [16, 640, 13, 13] 0

Conv2D-171 [[16, 640, 13, 13]] [16, 128, 13, 13] 82,048

BatchNorm2D-64 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-144 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-172 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-31 [[16, 640, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-65 [[16, 672, 13, 13]] [16, 672, 13, 13] 2,688

ReLU-145 [[16, 672, 13, 13]] [16, 672, 13, 13] 0

Conv2D-173 [[16, 672, 13, 13]] [16, 128, 13, 13] 86,144

BatchNorm2D-66 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-146 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-174 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-32 [[16, 672, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-67 [[16, 704, 13, 13]] [16, 704, 13, 13] 2,816

ReLU-147 [[16, 704, 13, 13]] [16, 704, 13, 13] 0

Conv2D-175 [[16, 704, 13, 13]] [16, 128, 13, 13] 90,240

BatchNorm2D-68 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-148 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-176 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-33 [[16, 704, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-69 [[16, 736, 13, 13]] [16, 736, 13, 13] 2,944

ReLU-149 [[16, 736, 13, 13]] [16, 736, 13, 13] 0

Conv2D-177 [[16, 736, 13, 13]] [16, 128, 13, 13] 94,336

BatchNorm2D-70 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-150 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-178 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-34 [[16, 736, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-71 [[16, 768, 13, 13]] [16, 768, 13, 13] 3,072

ReLU-151 [[16, 768, 13, 13]] [16, 768, 13, 13] 0

Conv2D-179 [[16, 768, 13, 13]] [16, 128, 13, 13] 98,432

BatchNorm2D-72 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-152 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-180 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-35 [[16, 768, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-73 [[16, 800, 13, 13]] [16, 800, 13, 13] 3,200

ReLU-153 [[16, 800, 13, 13]] [16, 800, 13, 13] 0

Conv2D-181 [[16, 800, 13, 13]] [16, 128, 13, 13] 102,528

BatchNorm2D-74 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-154 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-182 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-36 [[16, 800, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-75 [[16, 832, 13, 13]] [16, 832, 13, 13] 3,328

ReLU-155 [[16, 832, 13, 13]] [16, 832, 13, 13] 0

Conv2D-183 [[16, 832, 13, 13]] [16, 128, 13, 13] 106,624

BatchNorm2D-76 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-156 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-184 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-37 [[16, 832, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-77 [[16, 864, 13, 13]] [16, 864, 13, 13] 3,456

ReLU-157 [[16, 864, 13, 13]] [16, 864, 13, 13] 0

Conv2D-185 [[16, 864, 13, 13]] [16, 128, 13, 13] 110,720

BatchNorm2D-78 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-158 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-186 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-38 [[16, 864, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-79 [[16, 896, 13, 13]] [16, 896, 13, 13] 3,584

ReLU-159 [[16, 896, 13, 13]] [16, 896, 13, 13] 0

Conv2D-187 [[16, 896, 13, 13]] [16, 128, 13, 13] 114,816

BatchNorm2D-80 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-160 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-188 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-39 [[16, 896, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-81 [[16, 928, 13, 13]] [16, 928, 13, 13] 3,712

ReLU-161 [[16, 928, 13, 13]] [16, 928, 13, 13] 0

Conv2D-189 [[16, 928, 13, 13]] [16, 128, 13, 13] 118,912

BatchNorm2D-82 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-162 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-190 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-40 [[16, 928, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-83 [[16, 960, 13, 13]] [16, 960, 13, 13] 3,840

ReLU-163 [[16, 960, 13, 13]] [16, 960, 13, 13] 0

Conv2D-191 [[16, 960, 13, 13]] [16, 128, 13, 13] 123,008

BatchNorm2D-84 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-164 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-192 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-41 [[16, 960, 13, 13]] [16, 32, 13, 13] 0

BatchNorm2D-85 [[16, 992, 13, 13]] [16, 992, 13, 13] 3,968

ReLU-165 [[16, 992, 13, 13]] [16, 992, 13, 13] 0

Conv2D-193 [[16, 992, 13, 13]] [16, 128, 13, 13] 127,104

BatchNorm2D-86 [[16, 128, 13, 13]] [16, 128, 13, 13] 512

ReLU-166 [[16, 128, 13, 13]] [16, 128, 13, 13] 0

Conv2D-194 [[16, 128, 13, 13]] [16, 32, 13, 13] 36,896

DenseLayer-42 [[16, 992, 13, 13]] [16, 32, 13, 13] 0

DenseBlock-3 [[16, 256, 13, 13]] [16, 1024, 13, 13] 0

BatchNorm2D-87 [[16, 1024, 13, 13]] [16, 1024, 13, 13] 4,096

ReLU-167 [[16, 1024, 13, 13]] [16, 1024, 13, 13] 0

Conv2D-195 [[16, 1024, 13, 13]] [16, 512, 13, 13] 524,800

AvgPool2D-3 [[16, 512, 13, 13]] [16, 512, 6, 6] 0

Transition-3 [[16, 1024, 13, 13]] [16, 512, 6, 6] 0

BatchNorm2D-88 [[16, 512, 6, 6]] [16, 512, 6, 6] 2,048

ReLU-168 [[16, 512, 6, 6]] [16, 512, 6, 6] 0

Conv2D-196 [[16, 512, 6, 6]] [16, 128, 6, 6] 65,664

BatchNorm2D-89 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-169 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-197 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-43 [[16, 512, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-90 [[16, 544, 6, 6]] [16, 544, 6, 6] 2,176

ReLU-170 [[16, 544, 6, 6]] [16, 544, 6, 6] 0

Conv2D-198 [[16, 544, 6, 6]] [16, 128, 6, 6] 69,760

BatchNorm2D-91 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-171 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-199 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-44 [[16, 544, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-92 [[16, 576, 6, 6]] [16, 576, 6, 6] 2,304

ReLU-172 [[16, 576, 6, 6]] [16, 576, 6, 6] 0

Conv2D-200 [[16, 576, 6, 6]] [16, 128, 6, 6] 73,856

BatchNorm2D-93 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-173 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-201 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-45 [[16, 576, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-94 [[16, 608, 6, 6]] [16, 608, 6, 6] 2,432

ReLU-174 [[16, 608, 6, 6]] [16, 608, 6, 6] 0

Conv2D-202 [[16, 608, 6, 6]] [16, 128, 6, 6] 77,952

BatchNorm2D-95 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-175 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-203 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-46 [[16, 608, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-96 [[16, 640, 6, 6]] [16, 640, 6, 6] 2,560

ReLU-176 [[16, 640, 6, 6]] [16, 640, 6, 6] 0

Conv2D-204 [[16, 640, 6, 6]] [16, 128, 6, 6] 82,048

BatchNorm2D-97 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-177 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-205 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-47 [[16, 640, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-98 [[16, 672, 6, 6]] [16, 672, 6, 6] 2,688

ReLU-178 [[16, 672, 6, 6]] [16, 672, 6, 6] 0

Conv2D-206 [[16, 672, 6, 6]] [16, 128, 6, 6] 86,144

BatchNorm2D-99 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-179 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-207 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-48 [[16, 672, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-100 [[16, 704, 6, 6]] [16, 704, 6, 6] 2,816

ReLU-180 [[16, 704, 6, 6]] [16, 704, 6, 6] 0

Conv2D-208 [[16, 704, 6, 6]] [16, 128, 6, 6] 90,240

BatchNorm2D-101 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-181 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-209 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-49 [[16, 704, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-102 [[16, 736, 6, 6]] [16, 736, 6, 6] 2,944

ReLU-182 [[16, 736, 6, 6]] [16, 736, 6, 6] 0

Conv2D-210 [[16, 736, 6, 6]] [16, 128, 6, 6] 94,336

BatchNorm2D-103 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-183 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-211 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-50 [[16, 736, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-104 [[16, 768, 6, 6]] [16, 768, 6, 6] 3,072

ReLU-184 [[16, 768, 6, 6]] [16, 768, 6, 6] 0

Conv2D-212 [[16, 768, 6, 6]] [16, 128, 6, 6] 98,432

BatchNorm2D-105 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-185 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-213 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-51 [[16, 768, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-106 [[16, 800, 6, 6]] [16, 800, 6, 6] 3,200

ReLU-186 [[16, 800, 6, 6]] [16, 800, 6, 6] 0

Conv2D-214 [[16, 800, 6, 6]] [16, 128, 6, 6] 102,528

BatchNorm2D-107 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-187 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-215 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-52 [[16, 800, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-108 [[16, 832, 6, 6]] [16, 832, 6, 6] 3,328

ReLU-188 [[16, 832, 6, 6]] [16, 832, 6, 6] 0

Conv2D-216 [[16, 832, 6, 6]] [16, 128, 6, 6] 106,624

BatchNorm2D-109 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-189 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-217 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-53 [[16, 832, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-110 [[16, 864, 6, 6]] [16, 864, 6, 6] 3,456

ReLU-190 [[16, 864, 6, 6]] [16, 864, 6, 6] 0

Conv2D-218 [[16, 864, 6, 6]] [16, 128, 6, 6] 110,720

BatchNorm2D-111 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-191 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-219 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-54 [[16, 864, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-112 [[16, 896, 6, 6]] [16, 896, 6, 6] 3,584

ReLU-192 [[16, 896, 6, 6]] [16, 896, 6, 6] 0

Conv2D-220 [[16, 896, 6, 6]] [16, 128, 6, 6] 114,816

BatchNorm2D-113 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-193 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-221 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-55 [[16, 896, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-114 [[16, 928, 6, 6]] [16, 928, 6, 6] 3,712

ReLU-194 [[16, 928, 6, 6]] [16, 928, 6, 6] 0

Conv2D-222 [[16, 928, 6, 6]] [16, 128, 6, 6] 118,912

BatchNorm2D-115 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-195 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-223 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-56 [[16, 928, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-116 [[16, 960, 6, 6]] [16, 960, 6, 6] 3,840

ReLU-196 [[16, 960, 6, 6]] [16, 960, 6, 6] 0

Conv2D-224 [[16, 960, 6, 6]] [16, 128, 6, 6] 123,008

BatchNorm2D-117 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-197 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-225 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-57 [[16, 960, 6, 6]] [16, 32, 6, 6] 0

BatchNorm2D-118 [[16, 992, 6, 6]] [16, 992, 6, 6] 3,968

ReLU-198 [[16, 992, 6, 6]] [16, 992, 6, 6] 0

Conv2D-226 [[16, 992, 6, 6]] [16, 128, 6, 6] 127,104

BatchNorm2D-119 [[16, 128, 6, 6]] [16, 128, 6, 6] 512

ReLU-199 [[16, 128, 6, 6]] [16, 128, 6, 6] 0

Conv2D-227 [[16, 128, 6, 6]] [16, 32, 6, 6] 36,896

DenseLayer-58 [[16, 992, 6, 6]] [16, 32, 6, 6] 0

DenseBlock-4 [[16, 512, 6, 6]] [16, 1024, 6, 6] 0

BatchNorm2D-120 [[16, 1024, 6, 6]] [16, 1024, 6, 6] 4,096

ReLU-200 [[16, 1024, 6, 6]] [16, 1024, 6, 6] 0

AdaptiveAvgPool2D-1 [[16, 1024, 6, 6]] [16, 1024, 1, 1] 0

Flatten-23 [[16, 1024, 1, 1]] [16, 1024] 0

Linear-35 [[16, 1024]] [16, 2] 2,050

===============================================================================

Total params: 7,049,538

Trainable params: 6,882,498

Non-trainable params: 167,040
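The per-layer counts in the summary above can be checked by hand. The sketch below reproduces a few rows, assuming the bottleneck convolutions use 1x1 kernels, the growth convolutions use 3x3 kernels, and every convolution carries a bias term. These are assumptions inferred from the printed numbers, and the helper names are made up for illustration.

```python
def conv2d_params(in_ch, out_ch, kernel, bias=True):
    """Kernel weights (out * in * k * k) plus one optional bias per output channel."""
    return out_ch * in_ch * kernel * kernel + (out_ch if bias else 0)


def batchnorm2d_params(channels):
    """Return (trainable, non_trainable): scale/shift vs. running mean/variance."""
    return 2 * channels, 2 * channels


def linear_params(in_features, out_features):
    """Weight matrix plus bias vector."""
    return in_features * out_features + out_features


# Rows taken from the summary table
print(conv2d_params(512, 128, 1))        # Conv2D-163, listed as 65,664
print(conv2d_params(128, 32, 3))         # Conv2D-164, listed as 36,896
print(sum(batchnorm2d_params(544)))      # BatchNorm2D-57, listed as 2,176
print(conv2d_params(1024, 512, 1))       # Conv2D-195 (transition), listed as 524,800
print(linear_params(1024, 2))            # Linear-35, listed as 2,050

# Non-trainable parameters are the difference between the two reported totals
print(7_049_538 - 6_882_498)             # listed as 167,040
```

Under these assumptions the non-trainable count comes only from the running mean and variance that each BatchNorm2D layer tracks, which is why it equals the gap between the total and trainable parameters.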

-------------------------------------------------------------------------------

Input size (MB): 9.19

Forward/backward pass size (MB): 4472.80

Params size (MB): 26.89

Estimated Total Size (MB): 4508.88
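The size estimates follow from simple arithmetic if every value is stored as a 4-byte float32. The sketch below assumes the summary was run on an input of shape [16, 3, 224, 224]. The batch size of 16 is read from the table rows and the 3x224x224 image shape from the dataset class, so treat the shape as an assumption rather than a confirmed setting.

```python
BYTES_PER_FLOAT32 = 4
BYTES_PER_MB = 1024 ** 2


def to_mb(num_values):
    """Memory in MB for a given number of float32 values."""
    return num_values * BYTES_PER_FLOAT32 / BYTES_PER_MB


input_mb = to_mb(16 * 3 * 224 * 224)     # assumed input shape [16, 3, 224, 224]
params_mb = to_mb(7_049_538)             # total parameters from the summary
forward_backward_mb = 4472.80            # copied from the summary, not recomputed here
total_mb = input_mb + forward_backward_mb + params_mb

print(f"Input size (MB): {input_mb:.2f}")
print(f"Params size (MB): {params_mb:.2f}")
print(f"Estimated Total Size (MB): {total_mb:.2f}")
```

The forward/backward figure dominates the total because it grows with the batch size and with every intermediate feature map, so lowering the batch size is the most direct way to shrink the estimate.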

-------------------------------------------------------------------------------

{'total_params': 7049538, 'trainable_params': 6882498}

Model training

model=paddle.Model(network)
lr=paddle.optimizer.lr.CosineAnnealingDecay(learning_rate=lr_base,T_max=epochs)
# Momentum optimizer; note that it is overwritten by the Adam optimizer on the next assignment
opt=paddle.optimizer.Momentum(learning_rate=lr,parameters=model.parameters(),weight_decay=1e-2)
# Adam is the optimizer actually used for training
opt=paddle.optimizer.Adam(learning_rate=lr,parameters=model.parameters())
loss=paddle.nn.CrossEntropyLoss()#axis=1
model.prepare(opt, loss,metrics=paddle.metric.Accuracy())
model.fit(train_loader, val_loader, epochs=epochs,verbose=2,save_dir="./net_params",log_freq=1)
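To get a feel for what the cosine annealing schedule does to the learning rate, here is a minimal pure-Python sketch of the closed-form cosine curve with lr_base=1e-3 and T_max=10. It does not call PaddlePaddle, the function name is hypothetical, and it assumes a minimum learning rate of 0 and one scheduler step per unit of t.

```python
import math


def cosine_annealing_lr(base_lr, t, t_max, eta_min=0.0):
    """Closed-form cosine annealing: base_lr at t=0, eta_min at t=t_max."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * t / t_max))


lr_base, epochs = 1e-3, 10
schedule = [cosine_annealing_lr(lr_base, t, epochs) for t in range(epochs + 1)]

for t, value in enumerate(schedule):
    print(f"t={t:2d}  lr={value:.6f}")
```

Whether t counts epochs or batches depends on how the training loop advances the scheduler. If it is stepped once per batch, T_max=10 is reached after only 10 batches, so it is worth checking this and, if needed, setting T_max to the total number of steps.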

The loss value printed in the log is the current step, and the metric is the average value of previous steps.

Epoch 1/10
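The note in the log means the loss is per batch while acc is a running average over all samples seen so far in the epoch. A small sketch of that bookkeeping follows, using batch_size=4 from the configuration. The per-batch correct counts are hypothetical values chosen to be consistent with the first four acc readings of epoch 1 (0.0000, 0.3750, 0.3333, 0.4375).

```python
def running_accuracy(correct_per_batch, batch_size):
    """Cumulative accuracy after each batch: total correct / total samples seen."""
    correct = 0
    seen = 0
    history = []
    for batch_correct in correct_per_batch:
        correct += batch_correct
        seen += batch_size
        history.append(correct / seen)
    return history


# Hypothetical correct counts for the first four batches of epoch 1
for step, acc in enumerate(running_accuracy([0, 3, 1, 3], batch_size=4), start=1):
    print(f"step {step} - acc: {acc:.4f}")
```

This is why acc moves in large jumps at the start of an epoch and settles later: early on, a single batch of four images changes the average a lot.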

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:641: UserWarning: When training, we now always track global mean and variance. "When training, we now always track global mean and variance.")

step 1/40 - loss: 0.8786 - acc: 0.0000e+00 - 884ms/step step 2/40 - loss: 1.3529 - acc: 0.3750 - 636ms/step step 3/40 - loss: 4.8848 - acc: 0.3333 - 548ms/step step 4/40 - loss: 0.6855 - acc: 0.4375 - 504ms/step step 5/40 - loss: 1.3280 - acc: 0.4500 - 477ms/step step 6/40 - loss: 1.4665 - acc: 0.4583 - 459ms/step step 7/40 - loss: 0.2446 - acc: 0.5000 - 446ms/step step 8/40 - loss: 0.1795 - acc: 0.5625 - 437ms/step step 9/40 - loss: 0.1281 - acc: 0.6111 - 430ms/step step 10/40 - loss: 0.7968 - acc: 0.6250 - 423ms/step step 11/40 - loss: 2.5774 - acc: 0.5682 - 419ms/step step 12/40 - loss: 0.2166 - acc: 0.6042 - 417ms/step step 13/40 - loss: 2.4652 - acc: 0.5577 - 415ms/step step 14/40 - loss: 1.1314 - acc: 0.5357 - 413ms/step step 15/40 - loss: 0.6019 - acc: 0.5500 - 411ms/step step 16/40 - loss: 1.0493 - acc: 0.5469 - 410ms/step step 17/40 - loss: 0.3270 - acc: 0.5588 - 409ms/step step 18/40 - loss: 0.1344 - acc: 0.5833 - 408ms/step step 19/40 - loss: 0.9306 - acc: 0.5921 - 407ms/step step 20/40 - loss: 0.0057 - acc: 0.6125 - 405ms/step step 21/40 - loss: 0.1379 - acc: 0.6310 - 403ms/step step 22/40 - loss: 0.0118 - acc: 0.6477 - 402ms/step step 23/40 - loss: 0.0922 - acc: 0.6630 - 402ms/step step 24/40 - loss: 0.0295 - acc: 0.6771 - 401ms/step step 25/40 - loss: 0.8993 - acc: 0.6700 - 400ms/step step 26/40 - loss: 0.6457 - acc: 0.6731 - 400ms/step step 27/40 - loss: 0.0824 - acc: 0.6852 - 399ms/step step 28/40 - loss: 0.1024 - acc: 0.6964 - 398ms/step step 29/40 - loss: 0.1232 - acc: 0.7069 - 398ms/step step 30/40 - loss: 0.5631 - acc: 0.7083 - 397ms/step step 31/40 - loss: 0.0370 - acc: 0.7177 - 397ms/step step 32/40 - loss: 0.2733 - acc: 0.7188 - 397ms/step step 33/40 - loss: 6.6403 - acc: 0.7045 - 396ms/step step 34/40 - loss: 0.1885 - acc: 0.7132 - 395ms/step step 35/40 - loss: 0.0506 - acc: 0.7214 - 395ms/step step 36/40 - loss: 3.8153 - acc: 0.7222 - 394ms/step step 37/40 - loss: 5.5249 - acc: 0.7162 - 393ms/step step 38/40 - loss: 0.5504 - acc: 0.7171 - 
393ms/step step 39/40 - loss: 0.9939 - acc: 0.7179 - 392ms/step step 40/40 - loss: 0.9726 - acc: 0.7188 - 391ms/step save checkpoint at /home/aistudio/net_params/0 Eval begin... step 1/10 - loss: 0.8339 - acc: 0.7500 - 189ms/step step 2/10 - loss: 3.0489 - acc: 0.5000 - 173ms/step step 3/10 - loss: 6.1806 - acc: 0.4167 - 167ms/step step 4/10 - loss: 2.5625 - acc: 0.4375 - 165ms/step step 5/10 - loss: 6.9693 - acc: 0.4500 - 164ms/step step 6/10 - loss: 7.5170 - acc: 0.4167 - 163ms/step step 7/10 - loss: 2.3508 - acc: 0.3929 - 166ms/step step 8/10 - loss: 4.7935 - acc: 0.4375 - 165ms/step step 9/10 - loss: 9.8368 - acc: 0.3889 - 164ms/step step 10/10 - loss: 4.2736 - acc: 0.4000 - 163ms/step Eval samples: 40 Epoch 2/10 step 1/40 - loss: 0.1939 - acc: 1.0000 - 427ms/step step 2/40 - loss: 0.1233 - acc: 1.0000 - 405ms/step step 3/40 - loss: 0.1640 - acc: 1.0000 - 402ms/step step 4/40 - loss: 0.2072 - acc: 1.0000 - 399ms/step step 5/40 - loss: 0.0291 - acc: 1.0000 - 396ms/step step 6/40 - loss: 0.5622 - acc: 0.9583 - 393ms/step step 7/40 - loss: 1.7429 - acc: 0.8929 - 391ms/step step 8/40 - loss: 0.0600 - acc: 0.9062 - 390ms/step step 9/40 - loss: 0.0239 - acc: 0.9167 - 390ms/step step 10/40 - loss: 0.0170 - acc: 0.9250 - 388ms/step step 11/40 - loss: 0.4259 - acc: 0.9091 - 387ms/step step 12/40 - loss: 0.3709 - acc: 0.8958 - 386ms/step step 13/40 - loss: 0.2406 - acc: 0.9038 - 384ms/step step 14/40 - loss: 0.0292 - acc: 0.9107 - 384ms/step step 15/40 - loss: 0.8347 - acc: 0.9000 - 384ms/step step 16/40 - loss: 0.0132 - acc: 0.9062 - 384ms/step step 17/40 - loss: 0.1719 - acc: 0.9118 - 384ms/step step 18/40 - loss: 0.1203 - acc: 0.9167 - 384ms/step step 19/40 - loss: 0.1806 - acc: 0.9211 - 383ms/step step 20/40 - loss: 0.0590 - acc: 0.9250 - 383ms/step step 21/40 - loss: 0.7904 - acc: 0.9167 - 383ms/step step 22/40 - loss: 0.7270 - acc: 0.9091 - 383ms/step step 23/40 - loss: 0.0995 - acc: 0.9130 - 382ms/step step 24/40 - loss: 0.6964 - acc: 0.9062 - 383ms/step step 
25/40 - loss: 0.0703 - acc: 0.9100 - 382ms/step step 26/40 - loss: 0.2966 - acc: 0.9038 - 382ms/step step 27/40 - loss: 0.0068 - acc: 0.9074 - 382ms/step step 28/40 - loss: 3.3363 - acc: 0.9018 - 382ms/step step 29/40 - loss: 0.0243 - acc: 0.9052 - 383ms/step step 30/40 - loss: 0.0606 - acc: 0.9083 - 383ms/step step 31/40 - loss: 0.5678 - acc: 0.9032 - 383ms/step step 32/40 - loss: 0.0569 - acc: 0.9062 - 383ms/step step 33/40 - loss: 0.0124 - acc: 0.9091 - 383ms/step step 34/40 - loss: 0.0041 - acc: 0.9118 - 383ms/step step 35/40 - loss: 0.1410 - acc: 0.9143 - 383ms/step step 36/40 - loss: 0.0177 - acc: 0.9167 - 382ms/step step 37/40 - loss: 0.4238 - acc: 0.9122 - 382ms/step step 38/40 - loss: 0.0226 - acc: 0.9145 - 382ms/step step 39/40 - loss: 0.7144 - acc: 0.9103 - 381ms/step step 40/40 - loss: 0.0323 - acc: 0.9125 - 381ms/step save checkpoint at /home/aistudio/net_params/1 Eval begin... step 1/10 - loss: 1.5320 - acc: 0.7500 - 190ms/step step 2/10 - loss: 0.0654 - acc: 0.8750 - 173ms/step step 3/10 - loss: 0.1166 - acc: 0.9167 - 167ms/step step 4/10 - loss: 0.0883 - acc: 0.9375 - 164ms/step step 5/10 - loss: 0.0637 - acc: 0.9500 - 162ms/step step 6/10 - loss: 0.7424 - acc: 0.9167 - 160ms/step step 7/10 - loss: 0.1827 - acc: 0.9286 - 159ms/step step 8/10 - loss: 0.0102 - acc: 0.9375 - 159ms/step step 9/10 - loss: 8.4945e-04 - acc: 0.9444 - 159ms/step step 10/10 - loss: 0.0039 - acc: 0.9500 - 158ms/step Eval samples: 40 Epoch 3/10 step 1/40 - loss: 0.0395 - acc: 1.0000 - 401ms/step step 2/40 - loss: 0.1322 - acc: 1.0000 - 388ms/step step 3/40 - loss: 0.0062 - acc: 1.0000 - 386ms/step step 4/40 - loss: 0.0462 - acc: 1.0000 - 387ms/step step 5/40 - loss: 1.5052 - acc: 0.9000 - 386ms/step step 6/40 - loss: 0.0362 - acc: 0.9167 - 384ms/step step 7/40 - loss: 0.1628 - acc: 0.9286 - 383ms/step step 8/40 - loss: 0.1661 - acc: 0.9375 - 383ms/step step 9/40 - loss: 1.4469 - acc: 0.9167 - 382ms/step step 10/40 - loss: 0.4381 - acc: 0.9000 - 382ms/step step 11/40 - loss: 
0.4622 - acc: 0.8864 - 381ms/step step 12/40 - loss: 1.9192 - acc: 0.8542 - 380ms/step step 13/40 - loss: 0.1092 - acc: 0.8654 - 380ms/step step 14/40 - loss: 0.0249 - acc: 0.8750 - 380ms/step step 15/40 - loss: 0.1569 - acc: 0.8833 - 381ms/step step 16/40 - loss: 0.6889 - acc: 0.8750 - 381ms/step step 17/40 - loss: 1.1773 - acc: 0.8676 - 384ms/step step 18/40 - loss: 0.1330 - acc: 0.8750 - 384ms/step step 19/40 - loss: 0.0544 - acc: 0.8816 - 383ms/step step 20/40 - loss: 0.1582 - acc: 0.8875 - 383ms/step step 21/40 - loss: 0.1629 - acc: 0.8929 - 383ms/step step 22/40 - loss: 0.2393 - acc: 0.8864 - 382ms/step step 23/40 - loss: 0.2353 - acc: 0.8913 - 382ms/step step 24/40 - loss: 0.1751 - acc: 0.8958 - 382ms/step step 25/40 - loss: 0.0879 - acc: 0.9000 - 382ms/step step 26/40 - loss: 0.0663 - acc: 0.9038 - 382ms/step step 27/40 - loss: 1.2867 - acc: 0.8981 - 382ms/step step 28/40 - loss: 0.0812 - acc: 0.9018 - 382ms/step step 29/40 - loss: 0.0434 - acc: 0.9052 - 382ms/step step 30/40 - loss: 0.9762 - acc: 0.9000 - 382ms/step step 31/40 - loss: 0.9838 - acc: 0.8952 - 382ms/step step 32/40 - loss: 0.0421 - acc: 0.8984 - 382ms/step step 33/40 - loss: 0.0318 - acc: 0.9015 - 382ms/step step 34/40 - loss: 0.0415 - acc: 0.9044 - 382ms/step step 35/40 - loss: 0.7238 - acc: 0.9000 - 382ms/step step 36/40 - loss: 0.0645 - acc: 0.9028 - 382ms/step step 37/40 - loss: 0.2908 - acc: 0.8986 - 382ms/step step 38/40 - loss: 0.0585 - acc: 0.9013 - 381ms/step step 39/40 - loss: 0.1431 - acc: 0.9038 - 381ms/step step 40/40 - loss: 0.2628 - acc: 0.9062 - 381ms/step save checkpoint at /home/aistudio/net_params/2 Eval begin... 
step 1/10 - loss: 0.6657 - acc: 0.7500 - 189ms/step step 2/10 - loss: 0.2851 - acc: 0.8750 - 173ms/step step 3/10 - loss: 0.1932 - acc: 0.9167 - 168ms/step step 4/10 - loss: 0.1568 - acc: 0.9375 - 165ms/step step 5/10 - loss: 0.2661 - acc: 0.9500 - 163ms/step step 6/10 - loss: 0.3660 - acc: 0.9167 - 162ms/step step 7/10 - loss: 0.1076 - acc: 0.9286 - 161ms/step step 8/10 - loss: 0.3483 - acc: 0.9062 - 160ms/step step 9/10 - loss: 0.0626 - acc: 0.9167 - 159ms/step step 10/10 - loss: 2.5330 - acc: 0.9000 - 158ms/step Eval samples: 40 Epoch 4/10 step 1/40 - loss: 0.0737 - acc: 1.0000 - 410ms/step step 2/40 - loss: 0.1885 - acc: 1.0000 - 393ms/step step 3/40 - loss: 0.2421 - acc: 1.0000 - 388ms/step step 4/40 - loss: 0.3262 - acc: 1.0000 - 388ms/step step 5/40 - loss: 0.1046 - acc: 1.0000 - 387ms/step step 6/40 - loss: 0.3956 - acc: 0.9583 - 386ms/step step 7/40 - loss: 0.0820 - acc: 0.9643 - 384ms/step step 8/40 - loss: 0.0214 - acc: 0.9688 - 383ms/step step 9/40 - loss: 2.7114 - acc: 0.9167 - 384ms/step step 10/40 - loss: 0.3407 - acc: 0.9000 - 383ms/step step 11/40 - loss: 0.1124 - acc: 0.9091 - 383ms/step step 12/40 - loss: 0.0579 - acc: 0.9167 - 383ms/step step 13/40 - loss: 2.1480 - acc: 0.8846 - 383ms/step step 14/40 - loss: 1.6564 - acc: 0.8393 - 385ms/step step 15/40 - loss: 2.2699 - acc: 0.8167 - 390ms/step step 16/40 - loss: 0.0320 - acc: 0.8281 - 390ms/step step 17/40 - loss: 0.0675 - acc: 0.8382 - 390ms/step step 18/40 - loss: 0.7838 - acc: 0.8333 - 391ms/step step 19/40 - loss: 0.8567 - acc: 0.8289 - 391ms/step step 20/40 - loss: 1.2302 - acc: 0.8125 - 392ms/step step 21/40 - loss: 0.8249 - acc: 0.8095 - 392ms/step step 22/40 - loss: 0.0762 - acc: 0.8182 - 391ms/step step 23/40 - loss: 0.6335 - acc: 0.8152 - 391ms/step step 24/40 - loss: 1.4432 - acc: 0.8125 - 390ms/step step 25/40 - loss: 0.3524 - acc: 0.8100 - 390ms/step step 26/40 - loss: 0.0775 - acc: 0.8173 - 389ms/step step 27/40 - loss: 0.0042 - acc: 0.8241 - 389ms/step step 28/40 - loss: 0.4740 
- acc: 0.8214 - 389ms/step step 29/40 - loss: 0.0156 - acc: 0.8276 - 389ms/step step 30/40 - loss: 0.7265 - acc: 0.8167 - 389ms/step step 31/40 - loss: 0.0151 - acc: 0.8226 - 389ms/step step 32/40 - loss: 2.1609 - acc: 0.8047 - 389ms/step step 33/40 - loss: 0.7140 - acc: 0.7955 - 388ms/step step 34/40 - loss: 0.9281 - acc: 0.7794 - 388ms/step step 35/40 - loss: 0.3517 - acc: 0.7786 - 388ms/step step 36/40 - loss: 0.2083 - acc: 0.7778 - 387ms/step step 37/40 - loss: 0.0585 - acc: 0.7838 - 387ms/step step 38/40 - loss: 0.0416 - acc: 0.7895 - 386ms/step step 39/40 - loss: 0.0436 - acc: 0.7949 - 386ms/step step 40/40 - loss: 0.2235 - acc: 0.7937 - 386ms/step save checkpoint at /home/aistudio/net_params/3 Eval begin... step 1/10 - loss: 0.4787 - acc: 0.5000 - 193ms/step step 2/10 - loss: 0.2900 - acc: 0.7500 - 176ms/step step 3/10 - loss: 0.3595 - acc: 0.7500 - 169ms/step step 4/10 - loss: 1.2558 - acc: 0.7500 - 166ms/step step 5/10 - loss: 0.1971 - acc: 0.8000 - 164ms/step step 6/10 - loss: 0.2971 - acc: 0.7917 - 162ms/step step 7/10 - loss: 0.3777 - acc: 0.7857 - 160ms/step step 8/10 - loss: 0.3725 - acc: 0.8125 - 159ms/step step 9/10 - loss: 0.0464 - acc: 0.8333 - 159ms/step step 10/10 - loss: 0.1397 - acc: 0.8500 - 159ms/step Eval samples: 40 Epoch 5/10 step 1/40 - loss: 0.0323 - acc: 1.0000 - 419ms/step step 2/40 - loss: 0.6679 - acc: 0.8750 - 409ms/step step 3/40 - loss: 1.4082 - acc: 0.7500 - 405ms/step step 4/40 - loss: 0.1318 - acc: 0.8125 - 403ms/step step 5/40 - loss: 0.0193 - acc: 0.8500 - 401ms/step step 6/40 - loss: 0.2944 - acc: 0.8333 - 397ms/step step 7/40 - loss: 6.1585 - acc: 0.7857 - 394ms/step step 8/40 - loss: 0.5659 - acc: 0.7500 - 392ms/step step 9/40 - loss: 0.7713 - acc: 0.7500 - 390ms/step step 10/40 - loss: 1.2466 - acc: 0.7250 - 389ms/step step 11/40 - loss: 5.7933 - acc: 0.7045 - 388ms/step step 12/40 - loss: 0.0074 - acc: 0.7292 - 388ms/step step 13/40 - loss: 0.3855 - acc: 0.7308 - 389ms/step step 14/40 - loss: 0.2039 - acc: 0.7500 - 
389ms/step step 15/40 - loss: 0.1062 - acc: 0.7667 - 388ms/step step 16/40 - loss: 0.0897 - acc: 0.7812 - 388ms/step step 17/40 - loss: 0.0531 - acc: 0.7941 - 388ms/step step 18/40 - loss: 0.0057 - acc: 0.8056 - 387ms/step step 19/40 - loss: 3.8333 - acc: 0.7763 - 387ms/step step 20/40 - loss: 9.8705e-04 - acc: 0.7875 - 387ms/step step 21/40 - loss: 0.0078 - acc: 0.7976 - 387ms/step step 22/40 - loss: 0.1706 - acc: 0.8068 - 387ms/step step 23/40 - loss: 0.0057 - acc: 0.8152 - 387ms/step step 24/40 - loss: 0.7214 - acc: 0.8125 - 387ms/step step 25/40 - loss: 2.2940 - acc: 0.8000 - 387ms/step step 26/40 - loss: 0.1119 - acc: 0.8077 - 387ms/step step 27/40 - loss: 0.1626 - acc: 0.8148 - 387ms/step step 28/40 - loss: 0.1642 - acc: 0.8214 - 387ms/step step 29/40 - loss: 1.4720 - acc: 0.8190 - 386ms/step step 30/40 - loss: 0.1344 - acc: 0.8250 - 386ms/step step 31/40 - loss: 0.0357 - acc: 0.8306 - 386ms/step step 32/40 - loss: 0.9776 - acc: 0.8281 - 386ms/step step 33/40 - loss: 0.0590 - acc: 0.8333 - 385ms/step step 34/40 - loss: 0.0067 - acc: 0.8382 - 385ms/step step 35/40 - loss: 0.0716 - acc: 0.8429 - 385ms/step step 36/40 - loss: 1.0589 - acc: 0.8403 - 385ms/step step 37/40 - loss: 1.0528 - acc: 0.8243 - 385ms/step step 38/40 - loss: 0.1098 - acc: 0.8289 - 385ms/step step 39/40 - loss: 1.0890 - acc: 0.8141 - 385ms/step step 40/40 - loss: 0.0311 - acc: 0.8187 - 385ms/step save checkpoint at /home/aistudio/net_params/4 Eval begin... 
step 1/10 - loss: 0.6312 - acc: 0.7500 - 190ms/step step 2/10 - loss: 0.0878 - acc: 0.8750 - 174ms/step step 3/10 - loss: 0.3535 - acc: 0.8333 - 167ms/step step 4/10 - loss: 0.6739 - acc: 0.8125 - 164ms/step step 5/10 - loss: 0.4551 - acc: 0.8000 - 162ms/step step 6/10 - loss: 0.2485 - acc: 0.8333 - 161ms/step step 7/10 - loss: 0.0581 - acc: 0.8571 - 159ms/step step 8/10 - loss: 1.1049 - acc: 0.8438 - 158ms/step step 9/10 - loss: 0.7219 - acc: 0.8333 - 157ms/step step 10/10 - loss: 0.0683 - acc: 0.8500 - 157ms/step Eval samples: 40 Epoch 6/10 step 1/40 - loss: 0.0995 - acc: 1.0000 - 409ms/step step 2/40 - loss: 0.0347 - acc: 1.0000 - 393ms/step step 3/40 - loss: 0.0175 - acc: 1.0000 - 387ms/step step 4/40 - loss: 0.0142 - acc: 1.0000 - 384ms/step step 5/40 - loss: 0.0089 - acc: 1.0000 - 382ms/step step 6/40 - loss: 0.0130 - acc: 1.0000 - 382ms/step step 7/40 - loss: 0.0065 - acc: 1.0000 - 381ms/step step 8/40 - loss: 0.0886 - acc: 1.0000 - 381ms/step step 9/40 - loss: 0.0057 - acc: 1.0000 - 382ms/step step 10/40 - loss: 0.4009 - acc: 0.9750 - 383ms/step step 11/40 - loss: 0.0063 - acc: 0.9773 - 383ms/step step 12/40 - loss: 0.1222 - acc: 0.9792 - 385ms/step step 13/40 - loss: 0.2443 - acc: 0.9615 - 384ms/step step 14/40 - loss: 0.0042 - acc: 0.9643 - 383ms/step step 15/40 - loss: 0.1865 - acc: 0.9500 - 383ms/step step 16/40 - loss: 0.2686 - acc: 0.9375 - 384ms/step step 17/40 - loss: 0.0906 - acc: 0.9412 - 384ms/step step 18/40 - loss: 2.0408 - acc: 0.9028 - 383ms/step step 19/40 - loss: 2.7789 - acc: 0.8816 - 383ms/step step 20/40 - loss: 1.5114 - acc: 0.8625 - 382ms/step step 21/40 - loss: 0.0674 - acc: 0.8690 - 382ms/step step 22/40 - loss: 0.2582 - acc: 0.8750 - 382ms/step step 23/40 - loss: 0.2616 - acc: 0.8804 - 382ms/step step 24/40 - loss: 0.9854 - acc: 0.8750 - 382ms/step step 25/40 - loss: 0.5641 - acc: 0.8700 - 383ms/step step 26/40 - loss: 0.2137 - acc: 0.8654 - 383ms/step step 27/40 - loss: 1.1425 - acc: 0.8519 - 383ms/step step 28/40 - loss: 2.1342 - 
acc: 0.8214 - 383ms/step step 29/40 - loss: 0.1785 - acc: 0.8276 - 383ms/step step 30/40 - loss: 0.1765 - acc: 0.8333 - 383ms/step step 31/40 - loss: 0.3265 - acc: 0.8306 - 383ms/step step 32/40 - loss: 0.1236 - acc: 0.8359 - 383ms/step step 33/40 - loss: 0.0458 - acc: 0.8409 - 382ms/step step 34/40 - loss: 0.1007 - acc: 0.8456 - 383ms/step step 35/40 - loss: 0.0287 - acc: 0.8500 - 382ms/step step 36/40 - loss: 0.6687 - acc: 0.8403 - 383ms/step step 37/40 - loss: 0.1288 - acc: 0.8446 - 383ms/step step 38/40 - loss: 0.3275 - acc: 0.8421 - 383ms/step step 39/40 - loss: 0.0299 - acc: 0.8462 - 383ms/step step 40/40 - loss: 0.0082 - acc: 0.8500 - 382ms/step save checkpoint at /home/aistudio/net_params/5 Eval begin... step 1/10 - loss: 0.6713 - acc: 0.7500 - 190ms/step step 2/10 - loss: 0.0364 - acc: 0.8750 - 173ms/step step 3/10 - loss: 0.1046 - acc: 0.9167 - 167ms/step step 4/10 - loss: 0.0863 - acc: 0.9375 - 164ms/step step 5/10 - loss: 0.0156 - acc: 0.9500 - 162ms/step step 6/10 - loss: 0.3814 - acc: 0.9167 - 160ms/step step 7/10 - loss: 0.1301 - acc: 0.9286 - 159ms/step step 8/10 - loss: 0.1887 - acc: 0.9375 - 158ms/step step 9/10 - loss: 5.1071e-04 - acc: 0.9444 - 157ms/step step 10/10 - loss: 0.0056 - acc: 0.9500 - 156ms/step Eval samples: 40 Epoch 7/10 step 1/40 - loss: 0.0386 - acc: 1.0000 - 405ms/step step 2/40 - loss: 0.0222 - acc: 1.0000 - 390ms/step step 3/40 - loss: 0.0136 - acc: 1.0000 - 385ms/step step 4/40 - loss: 0.0808 - acc: 1.0000 - 383ms/step step 5/40 - loss: 0.2800 - acc: 0.9500 - 382ms/step step 6/40 - loss: 3.6075 - acc: 0.8750 - 382ms/step step 7/40 - loss: 0.0278 - acc: 0.8929 - 381ms/step step 8/40 - loss: 0.2057 - acc: 0.9062 - 382ms/step step 9/40 - loss: 0.0269 - acc: 0.9167 - 383ms/step step 10/40 - loss: 0.0028 - acc: 0.9250 - 384ms/step step 11/40 - loss: 0.7834 - acc: 0.9091 - 384ms/step step 12/40 - loss: 0.5458 - acc: 0.8958 - 384ms/step step 13/40 - loss: 0.1274 - acc: 0.9038 - 383ms/step step 14/40 - loss: 0.0356 - acc: 0.9107 - 
383ms/step step 15/40 - loss: 0.0309 - acc: 0.9167 - 383ms/step step 16/40 - loss: 0.0779 - acc: 0.9219 - 384ms/step step 17/40 - loss: 0.2377 - acc: 0.9118 - 384ms/step step 18/40 - loss: 0.0049 - acc: 0.9167 - 385ms/step step 19/40 - loss: 0.2741 - acc: 0.9079 - 386ms/step step 20/40 - loss: 0.0322 - acc: 0.9125 - 386ms/step step 21/40 - loss: 0.7266 - acc: 0.9048 - 387ms/step step 22/40 - loss: 0.0301 - acc: 0.9091 - 387ms/step step 23/40 - loss: 0.0418 - acc: 0.9130 - 390ms/step step 24/40 - loss: 0.0183 - acc: 0.9167 - 390ms/step step 25/40 - loss: 0.0116 - acc: 0.9200 - 389ms/step step 26/40 - loss: 0.0014 - acc: 0.9231 - 390ms/step step 27/40 - loss: 0.0010 - acc: 0.9259 - 389ms/step step 28/40 - loss: 0.0080 - acc: 0.9286 - 389ms/step step 29/40 - loss: 0.8775 - acc: 0.9224 - 389ms/step step 30/40 - loss: 1.5853 - acc: 0.9167 - 389ms/step step 31/40 - loss: 0.0105 - acc: 0.9194 - 389ms/step step 32/40 - loss: 0.1602 - acc: 0.9219 - 389ms/step step 33/40 - loss: 0.0230 - acc: 0.9242 - 388ms/step step 34/40 - loss: 0.0125 - acc: 0.9265 - 388ms/step step 35/40 - loss: 0.9842 - acc: 0.9214 - 388ms/step step 36/40 - loss: 0.3961 - acc: 0.9167 - 388ms/step step 37/40 - loss: 0.0035 - acc: 0.9189 - 388ms/step step 38/40 - loss: 0.0021 - acc: 0.9211 - 388ms/step step 39/40 - loss: 0.0378 - acc: 0.9231 - 388ms/step step 40/40 - loss: 0.0681 - acc: 0.9250 - 388ms/step save checkpoint at /home/aistudio/net_params/6 Eval begin... 
step 1/10 - loss: 1.1088 - acc: 0.7500 - 191ms/step step 2/10 - loss: 0.0657 - acc: 0.8750 - 175ms/step step 3/10 - loss: 0.3504 - acc: 0.8333 - 169ms/step step 4/10 - loss: 0.3822 - acc: 0.8125 - 166ms/step step 5/10 - loss: 0.0095 - acc: 0.8500 - 164ms/step step 6/10 - loss: 0.1725 - acc: 0.8750 - 163ms/step step 7/10 - loss: 0.0494 - acc: 0.8929 - 162ms/step step 8/10 - loss: 0.0705 - acc: 0.9062 - 162ms/step step 9/10 - loss: 0.0017 - acc: 0.9167 - 162ms/step step 10/10 - loss: 0.1170 - acc: 0.9250 - 161ms/step Eval samples: 40 Epoch 8/10 step 1/40 - loss: 0.0471 - acc: 1.0000 - 414ms/step step 2/40 - loss: 0.1036 - acc: 1.0000 - 405ms/step step 3/40 - loss: 0.0255 - acc: 1.0000 - 398ms/step step 4/40 - loss: 0.0952 - acc: 1.0000 - 396ms/step step 5/40 - loss: 0.0220 - acc: 1.0000 - 392ms/step step 6/40 - loss: 0.0714 - acc: 1.0000 - 390ms/step step 7/40 - loss: 0.1415 - acc: 1.0000 - 388ms/step step 8/40 - loss: 0.0573 - acc: 1.0000 - 387ms/step step 9/40 - loss: 0.4687 - acc: 0.9722 - 388ms/step step 10/40 - loss: 0.0601 - acc: 0.9750 - 388ms/step step 11/40 - loss: 3.3628 - acc: 0.9318 - 388ms/step step 12/40 - loss: 0.1056 - acc: 0.9375 - 387ms/step step 13/40 - loss: 0.0047 - acc: 0.9423 - 387ms/step step 14/40 - loss: 0.0695 - acc: 0.9464 - 386ms/step step 15/40 - loss: 0.0321 - acc: 0.9500 - 385ms/step step 16/40 - loss: 0.0046 - acc: 0.9531 - 385ms/step step 17/40 - loss: 0.2005 - acc: 0.9559 - 385ms/step step 18/40 - loss: 0.0053 - acc: 0.9583 - 384ms/step step 19/40 - loss: 2.9423 - acc: 0.9342 - 384ms/step step 20/40 - loss: 0.0326 - acc: 0.9375 - 384ms/step step 21/40 - loss: 0.0439 - acc: 0.9405 - 384ms/step step 22/40 - loss: 0.3001 - acc: 0.9318 - 386ms/step step 23/40 - loss: 0.4708 - acc: 0.9239 - 386ms/step step 24/40 - loss: 0.1299 - acc: 0.9271 - 386ms/step step 25/40 - loss: 0.3625 - acc: 0.9200 - 385ms/step step 26/40 - loss: 0.3287 - acc: 0.9135 - 385ms/step step 27/40 - loss: 0.0549 - acc: 0.9167 - 385ms/step step 28/40 - loss: 0.3235 - 
acc: 0.9107 - 384ms/step step 29/40 - loss: 0.0694 - acc: 0.9138 - 384ms/step step 30/40 - loss: 0.0509 - acc: 0.9167 - 384ms/step step 31/40 - loss: 0.0484 - acc: 0.9194 - 384ms/step step 32/40 - loss: 0.0848 - acc: 0.9219 - 384ms/step step 33/40 - loss: 0.8888 - acc: 0.9091 - 384ms/step step 34/40 - loss: 0.1689 - acc: 0.9118 - 383ms/step step 35/40 - loss: 0.1079 - acc: 0.9143 - 384ms/step step 36/40 - loss: 1.2262 - acc: 0.8958 - 384ms/step step 37/40 - loss: 0.0499 - acc: 0.8986 - 384ms/step step 38/40 - loss: 0.0687 - acc: 0.9013 - 383ms/step step 39/40 - loss: 0.1622 - acc: 0.9038 - 383ms/step step 40/40 - loss: 1.3731 - acc: 0.8938 - 383ms/step save checkpoint at /home/aistudio/net_params/7 Eval begin... step 1/10 - loss: 0.6370 - acc: 0.7500 - 189ms/step step 2/10 - loss: 0.1336 - acc: 0.8750 - 173ms/step step 3/10 - loss: 0.2266 - acc: 0.8333 - 166ms/step step 4/10 - loss: 0.6489 - acc: 0.8125 - 163ms/step step 5/10 - loss: 0.0253 - acc: 0.8500 - 161ms/step step 6/10 - loss: 0.1293 - acc: 0.8750 - 160ms/step step 7/10 - loss: 0.1190 - acc: 0.8929 - 158ms/step step 8/10 - loss: 0.1791 - acc: 0.9062 - 157ms/step step 9/10 - loss: 0.0681 - acc: 0.9167 - 157ms/step step 10/10 - loss: 0.2486 - acc: 0.9000 - 156ms/step Eval samples: 40 Epoch 9/10 step 1/40 - loss: 0.1473 - acc: 1.0000 - 412ms/step step 2/40 - loss: 0.0333 - acc: 1.0000 - 395ms/step step 3/40 - loss: 0.1590 - acc: 1.0000 - 392ms/step step 4/40 - loss: 0.1155 - acc: 1.0000 - 388ms/step step 5/40 - loss: 0.1285 - acc: 1.0000 - 385ms/step step 6/40 - loss: 0.0225 - acc: 1.0000 - 383ms/step step 7/40 - loss: 0.0925 - acc: 1.0000 - 382ms/step step 8/40 - loss: 0.0555 - acc: 1.0000 - 383ms/step step 9/40 - loss: 0.1942 - acc: 1.0000 - 383ms/step step 10/40 - loss: 0.1221 - acc: 1.0000 - 385ms/step step 11/40 - loss: 0.1720 - acc: 1.0000 - 385ms/step step 12/40 - loss: 0.1029 - acc: 1.0000 - 385ms/step step 13/40 - loss: 0.3487 - acc: 0.9808 - 385ms/step step 14/40 - loss: 0.0366 - acc: 0.9821 - 
384ms/step step 15/40 - loss: 0.1332 - acc: 0.9833 - 384ms/step step 16/40 - loss: 0.1053 - acc: 0.9844 - 383ms/step step 17/40 - loss: 0.8791 - acc: 0.9706 - 384ms/step step 18/40 - loss: 0.0092 - acc: 0.9722 - 383ms/step step 19/40 - loss: 0.1165 - acc: 0.9737 - 384ms/step step 20/40 - loss: 0.0231 - acc: 0.9750 - 384ms/step step 21/40 - loss: 0.0517 - acc: 0.9762 - 386ms/step step 22/40 - loss: 1.2441 - acc: 0.9545 - 386ms/step step 23/40 - loss: 0.0475 - acc: 0.9565 - 386ms/step step 24/40 - loss: 0.0250 - acc: 0.9583 - 385ms/step step 25/40 - loss: 1.8250 - acc: 0.9300 - 385ms/step step 26/40 - loss: 1.5704 - acc: 0.9135 - 385ms/step step 27/40 - loss: 0.0336 - acc: 0.9167 - 385ms/step step 28/40 - loss: 0.1095 - acc: 0.9196 - 385ms/step step 29/40 - loss: 0.0183 - acc: 0.9224 - 385ms/step step 30/40 - loss: 1.0537 - acc: 0.9000 - 384ms/step step 31/40 - loss: 0.0366 - acc: 0.9032 - 384ms/step step 32/40 - loss: 0.3328 - acc: 0.8984 - 384ms/step step 33/40 - loss: 1.4123 - acc: 0.8864 - 384ms/step step 34/40 - loss: 0.0306 - acc: 0.8897 - 384ms/step step 35/40 - loss: 0.1041 - acc: 0.8929 - 384ms/step step 36/40 - loss: 0.2017 - acc: 0.8889 - 384ms/step step 37/40 - loss: 0.0764 - acc: 0.8919 - 384ms/step step 38/40 - loss: 0.4936 - acc: 0.8816 - 384ms/step step 39/40 - loss: 0.0707 - acc: 0.8846 - 384ms/step step 40/40 - loss: 0.2644 - acc: 0.8812 - 384ms/step save checkpoint at /home/aistudio/net_params/8 Eval begin... 
step 1/10 - loss: 0.5388 - acc: 0.7500 - 191ms/step step 2/10 - loss: 0.1467 - acc: 0.8750 - 174ms/step step 3/10 - loss: 0.3554 - acc: 0.8333 - 167ms/step step 4/10 - loss: 0.9144 - acc: 0.8125 - 164ms/step step 5/10 - loss: 0.0390 - acc: 0.8500 - 162ms/step step 6/10 - loss: 0.1069 - acc: 0.8750 - 161ms/step step 7/10 - loss: 0.0991 - acc: 0.8929 - 160ms/step step 8/10 - loss: 0.3669 - acc: 0.8750 - 159ms/step step 9/10 - loss: 0.0068 - acc: 0.8889 - 158ms/step step 10/10 - loss: 0.1100 - acc: 0.9000 - 158ms/step Eval samples: 40 Epoch 10/10 step 1/40 - loss: 0.3692 - acc: 0.7500 - 411ms/step step 2/40 - loss: 0.0414 - acc: 0.8750 - 396ms/step step 3/40 - loss: 0.3528 - acc: 0.8333 - 390ms/step step 4/40 - loss: 1.5622 - acc: 0.6875 - 389ms/step step 5/40 - loss: 1.3839 - acc: 0.6500 - 387ms/step step 6/40 - loss: 0.0628 - acc: 0.7083 - 386ms/step step 7/40 - loss: 0.0734 - acc: 0.7500 - 386ms/step step 8/40 - loss: 0.1471 - acc: 0.7812 - 386ms/step step 9/40 - loss: 0.1939 - acc: 0.7778 - 385ms/step step 10/40 - loss: 0.0424 - acc: 0.8000 - 385ms/step step 11/40 - loss: 0.0248 - acc: 0.8182 - 385ms/step step 12/40 - loss: 0.1522 - acc: 0.8333 - 384ms/step step 13/40 - loss: 0.0541 - acc: 0.8462 - 383ms/step step 14/40 - loss: 0.2955 - acc: 0.8571 - 384ms/step step 15/40 - loss: 0.0316 - acc: 0.8667 - 384ms/step step 16/40 - loss: 0.1238 - acc: 0.8750 - 384ms/step step 17/40 - loss: 0.0661 - acc: 0.8824 - 383ms/step step 18/40 - loss: 1.5378 - acc: 0.8472 - 383ms/step step 19/40 - loss: 0.0806 - acc: 0.8553 - 383ms/step step 20/40 - loss: 1.9089 - acc: 0.8250 - 384ms/step step 21/40 - loss: 0.1667 - acc: 0.8333 - 385ms/step step 22/40 - loss: 0.0567 - acc: 0.8409 - 386ms/step step 23/40 - loss: 0.0559 - acc: 0.8478 - 386ms/step step 24/40 - loss: 0.1250 - acc: 0.8542 - 386ms/step step 25/40 - loss: 0.7509 - acc: 0.8300 - 387ms/step step 26/40 - loss: 0.0650 - acc: 0.8365 - 387ms/step step 27/40 - loss: 0.2121 - acc: 0.8426 - 388ms/step step 28/40 - loss: 0.9168 - 
acc: 0.8304 - 388ms/step step 29/40 - loss: 0.3841 - acc: 0.8276 - 388ms/step step 30/40 - loss: 0.2089 - acc: 0.8333 - 388ms/step step 31/40 - loss: 0.5309 - acc: 0.8306 - 387ms/step step 32/40 - loss: 0.0864 - acc: 0.8359 - 387ms/step step 33/40 - loss: 0.8717 - acc: 0.8258 - 386ms/step step 34/40 - loss: 0.0905 - acc: 0.8309 - 387ms/step step 35/40 - loss: 0.4231 - acc: 0.8214 - 386ms/step step 36/40 - loss: 0.7544 - acc: 0.8125 - 386ms/step step 37/40 - loss: 0.0584 - acc: 0.8176 - 386ms/step step 38/40 - loss: 0.1380 - acc: 0.8224 - 386ms/step step 39/40 - loss: 0.3090 - acc: 0.8269 - 386ms/step step 40/40 - loss: 0.1033 - acc: 0.8313 - 385ms/step save checkpoint at /home/aistudio/net_params/9 Eval begin... step 1/10 - loss: 0.6522 - acc: 0.7500 - 191ms/step step 2/10 - loss: 0.2859 - acc: 0.8750 - 174ms/step step 3/10 - loss: 0.2603 - acc: 0.8333 - 168ms/step step 4/10 - loss: 0.3827 - acc: 0.8125 - 165ms/step step 5/10 - loss: 0.1566 - acc: 0.8500 - 163ms/step step 6/10 - loss: 0.3308 - acc: 0.8333 - 161ms/step step 7/10 - loss: 0.2705 - acc: 0.8571 - 160ms/step step 8/10 - loss: 0.1988 - acc: 0.8750 - 159ms/step step 9/10 - loss: 0.1806 - acc: 0.8889 - 159ms/step step 10/10 - loss: 0.2267 - acc: 0.8750 - 159ms/step Eval samples: 40 save checkpoint at /home/aistudio/net_params/final
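
The log above is long, so it can help to extract only the last step of each pass. The snippet below is a minimal sketch, assuming every record follows the `step i/n - loss: x - acc: y` layout seen in the log. The excerpt string is copied from the final evaluation pass, and the names `pattern`, `records` and `final` are illustrative only. The `acc` column appears to be a running average over the batches seen so far: with a batch size of 4, the values 0.7500, 0.8750 and 0.8333 match 3/4, 7/8 and 10/12 correct predictions.

```python
import re

# Excerpt copied from the final evaluation pass in the log above.
log = ("step 9/10 - loss: 0.1806 - acc: 0.8889 - 159ms/step "
       "step 10/10 - loss: 0.2267 - acc: 0.8750 - 159ms/step Eval samples: 40")

# Capture step index, total steps, loss and accuracy from each record.
pattern = re.compile(r"step (\d+)/(\d+) - loss: ([\d.]+) - acc: ([\d.]+)")

records = [
    (int(i), int(n), float(loss), float(acc))
    for i, n, loss, acc in pattern.findall(log)
]

# Keep only the last step of each pass, where the running accuracy
# covers every batch of that pass.
final = [r for r in records if r[0] == r[1]]
print(final)
```

Because the regular expression does not depend on line breaks, it can also be applied to the whole log after replacing newlines with spaces, which rejoins the records that were split across lines.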

Model Validation

test_set=DatasetN("test_list.txt",T.Compose([T.Normalize(data_format="CHW")]))

model.load("net_params/final")

test_result=model.predict(test_set)[0]

print(np.argmax(test_result,axis=2).flatten())#1 1 0 1 0 0 0 1 0 1

Predict begin...

step 10/10 [==============================] - 153ms/step

Predict samples: 10

[1 1 1 1 0 0 0 1 1 1]
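
To quantify how far the prediction is from the target, the two label sequences can be compared directly. This is a minimal sketch, assuming the sequence in the code comment (`1 1 0 1 0 0 0 1 0 1`) is the expected labels for the 10 test images. The variable names `expected`, `predicted`, `mismatches` and `accuracy` are illustrative and not part of the original notebook.

```python
import numpy as np

# Assumed expected labels, copied from the comment in the prediction cell.
expected = np.array([1, 1, 0, 1, 0, 0, 0, 1, 0, 1])
# Predicted labels, copied from the printed output of the prediction cell.
predicted = np.array([1, 1, 1, 1, 0, 0, 0, 1, 1, 1])

# Positions where the prediction differs from the expected label.
mismatches = np.flatnonzero(expected != predicted)
# Fraction of test images classified as expected.
accuracy = float(np.mean(expected == predicted))

print("mismatched indices:", mismatches.tolist())
print("accuracy:", accuracy)
```

Under that assumption, two of the ten images (positions 2 and 8) are predicted as class 1 instead of class 0, which is the small deviation mentioned for this model.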

!rm -r net_params/*